The Killer App for Legal Education

This month, a startup called Midpage gave Claude direct access to real, citable, hyperlinked American case law—and the results are staggering. In this post, I walk through what happened when I connected Midpage's MCP integration to Claude Cowork and turned it loose on real teaching tasks: a grounded presentation on presidential war powers, an Eleventh Amendment problem set, a deep dive into the independent and adequate state grounds doctrine that produced a short law-review-style article, and a NextGen bar exam skill that generates constructed-response questions with grading rubrics—all anchored in full-text case law. I call the architecture behind this Grounded Open AI for Legal (GOAI4L), and I think it's the killer app for legal education. Read on to see why—and to see what it actually looks like in action.

Something happened this past month that deserves more attention than it has gotten in the legal education world. Midpage released an MCP integration for Claude. That sentence probably means nothing to most law professors, so let me translate: a startup with a comprehensive case law database just gave Claude—Anthropic's large language model—direct access to real, citable, hyperlinked American case law. And it didn't just make the capability available to the Claude chatbot with which many in legal education have familiarized themselves over the past few years. It didn't just make that capability available to Claude's Opus version 4.6, a language model with a context window of one million tokens that is probably the best in the business right now at text-based reasoning. It made full text legal sources available to Claude Cowork, which can orchestrate numerous AI agents running Opus 4.6 sequentially or in parallel and having access to a panoply of tools, including code. In short, it created Grounded Open Access Artificial Intelligence for Legal (GOAI4L), the Killer App.

But, as they say, don't tell them, show them. Here are sample projects that became possible this month due to the combination of Midpage with Claude Cowork.

A Grounded Presentation on Presidential War Powers

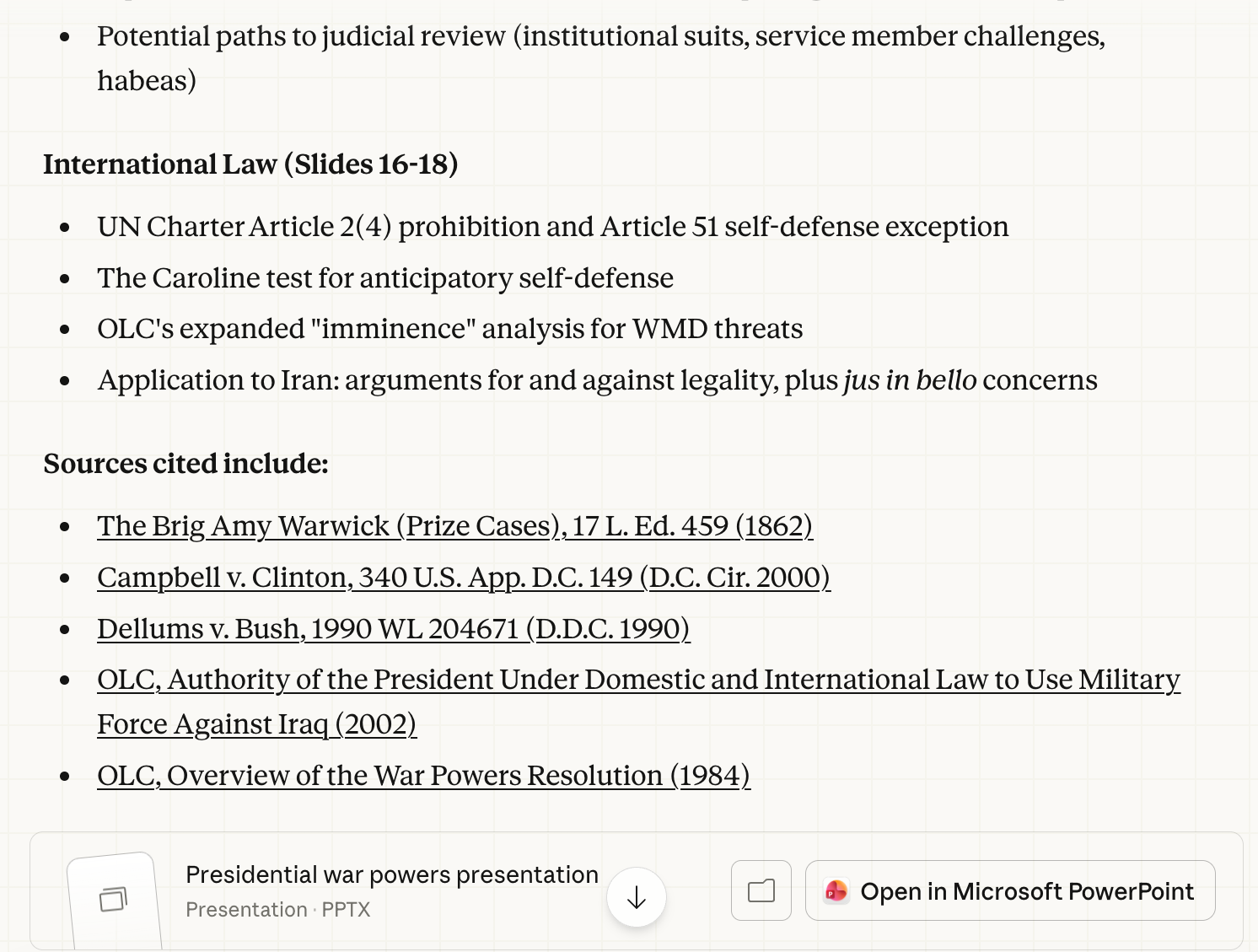

I wanted to have a canned presentation ready to go for my constitutional law class in the event of an American military intervention in Iran. To be sure, I could have had a discussion about the legal aspects of that contingency with any respectable AI; with a little guidance from me ("Here's the Prize Cases", "Consider this document asserting the War Powers Act is unconstitutional", "Create a beamer presentation of 20 slides based on the dialog we have had thus far"), I'm sure we could have generated something decent. Instead, however, I launched Claude Cowork with the Midpage AI MCP active and just asked the following:

I want you to imagine that sometime in the next week President Trump directs an attack on Iran, alleging that they were rebuilding their nuclear weapons program and a terror network. He also alleges they were about to attack us allies such as Israel. Assume the attack goes beyond facilities alleged to have been involved in those activities and seeks to kill Iranian leadership as well as its communications and control. Inevitably, some civilians are killed I want you to prepare a slide show of 20 slides for law students on (a) the president's authority under the text of the constitution; (b) the relevance of the Prize cases; (c) the war powers act, including its genesis, and focusing on its provisions. (d) I need you to discuss theories of why the war powers act is constitutional and why it is unconstitutional and what various presidents have said about it. have they complied; have they volunteered even though they think it unconstitutional. Explain why it has been difficult to challenge in court (justiciability). How might the federal courts acquire jurisdiction over such a case. Then have three slides on international law regarding the hypothesized attack. The tone should be objective and professional but not pull punches where legal issues are clear. Use the midpage mcp to ground your responses as needed.

Below I show some of the process Claude Cowork went through, abetted by the Midpage MCP.

The work continued on for several minutes. In the end, Claude provided me with a description of the slides it had produced, a linked set of sources it discovered (most of which it found autonomously), and an attractive PowerPoint presentation file. It wasn't perfect, but after one more iteration in which I provided additional guidance via some teaching materials I had used in prior years, I had a very serviceable presentation. I now have a framework I can interject into a constitutional law class in the event that I wake up one morning and find our nation embroiled in a regional Middle East war without prior consent from Congress.

Here are several other projects that I've been able to execute recently using Grounded Open AI for Legal (GOAI4L).

An 11th amendment PowerPoint for class

I gave Claude Cowork a standing and 11th amendment problem that I had drafted collaboratively with AI and said, "Use the midpage mcp connector to develop a good answer to this problem. You should focus on cases like Jackson v. Whole Womens Health but also cases on standing generally." ... "Turn your answer into a 7-8 slide PowerPoint".

Here's the output that emerged from GOAI4L:

Teaching materials and a law-review-style article on the doctrine of independent and adequate state grounds

I developed with AI an issue-spotting problem that required students to consider whether the independent and adequate state grounds theory precluded Supreme Court review of what appeared to be a substantive issue in constitutional law. A high-powered AI that did not yet benefit from an ability to access legal material blew it when asked to analyze the hypothetical. It charged ahead, declaring that of course the victim of an unwarranted search had rights, without ever considering whether a state law issue mentioned in the state supreme court opinion might defeat Supreme Court jurisdiction altogether. So I gave the problem instead to Claude Cowork buttressed with the Midpage MCP capability:

Write a lawyerly answer to this problem. Think hard as it contains a crucial issue that another AI completely missed.

I did not point Claude + Midpage AI to Michigan v. Long or any other material on the independent and adequate state grounds theory.

Here is some of the output from Claude Cowork, which had the good judgment to praise my ingenuity.

Claude Cowork returned commentary on the problem and a grounded answer with cites to older cases that were not entirely familiar to me. One of those cases, Murdock v. City of Memphis (1875), seemed central but when I opened it up in Midpage to human-read it, I found that it was going to be a slog. And, yes, law professors are well trained to deal with slog, but to accelerate matters, I asked AI for help. I could ask Claude Cowork to use a skill and make the opinion more lucid.

Again, Claude Cowork was able to use both the Midpage connector and a Claude skill that I had built to provide me with a clear version of the somewhat inscrutable opinion in Murdock.

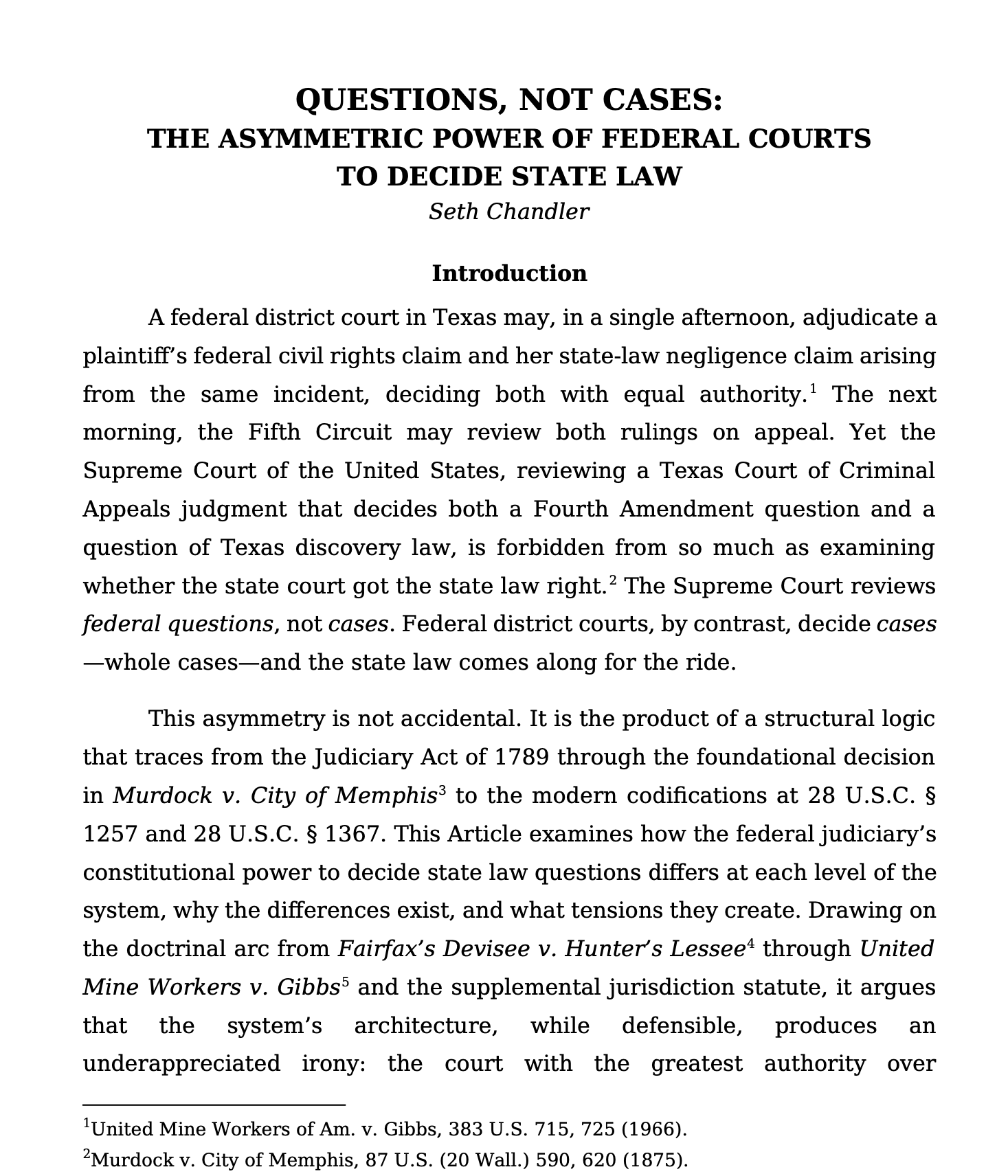

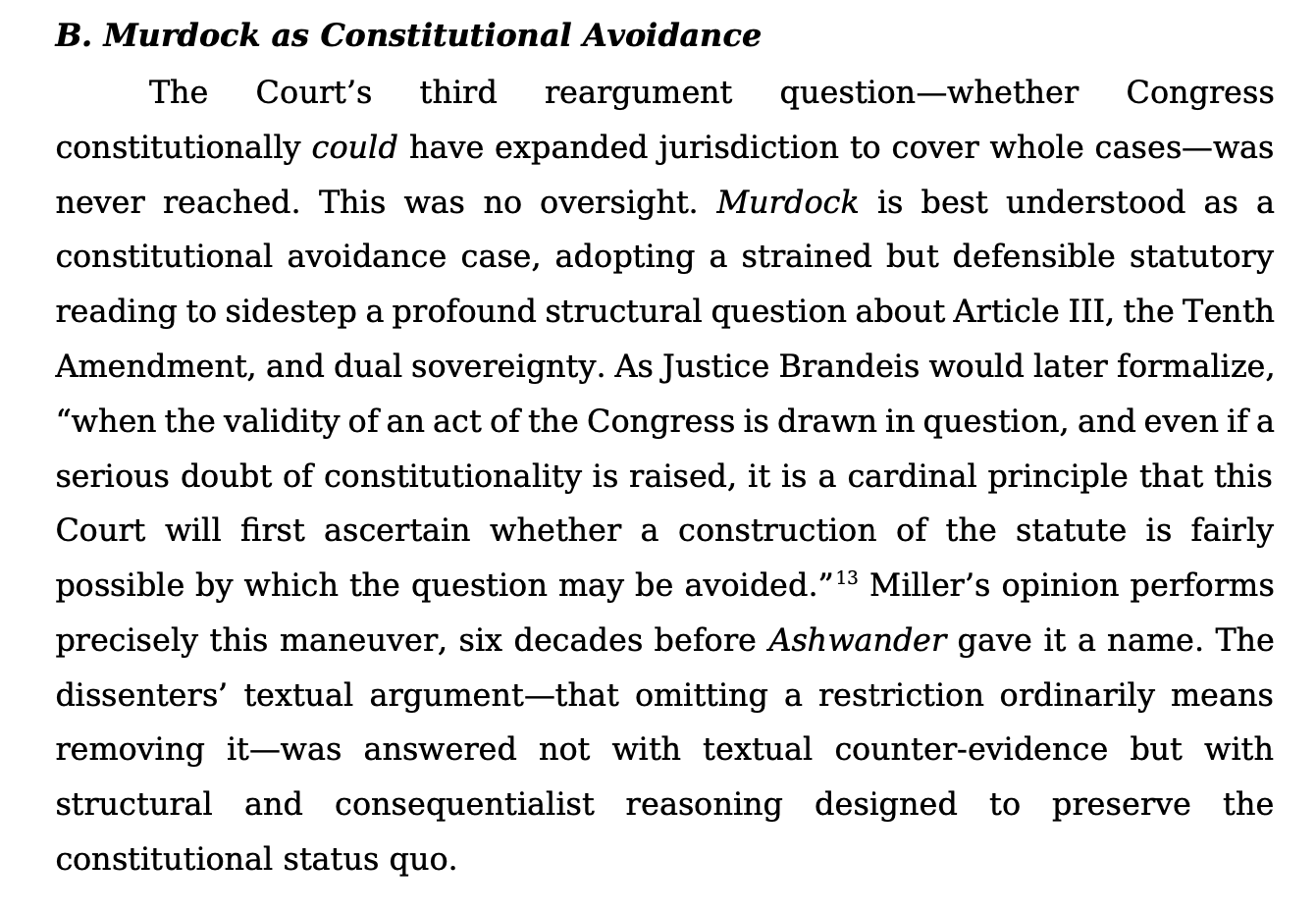

At this point Claude Cowork had gone past what I would ordinarily discuss with a first-year law class and into a more sophisticated exploration of the different treatment of related state claims when handled by a federal trial court and when handled by the Supreme Court. The end result of my collaboration with GOAI4L was a short law-review-style document that it titled "Questions, Not Cases: The Asymmetric Power of Federal Courts to Decide State Law."

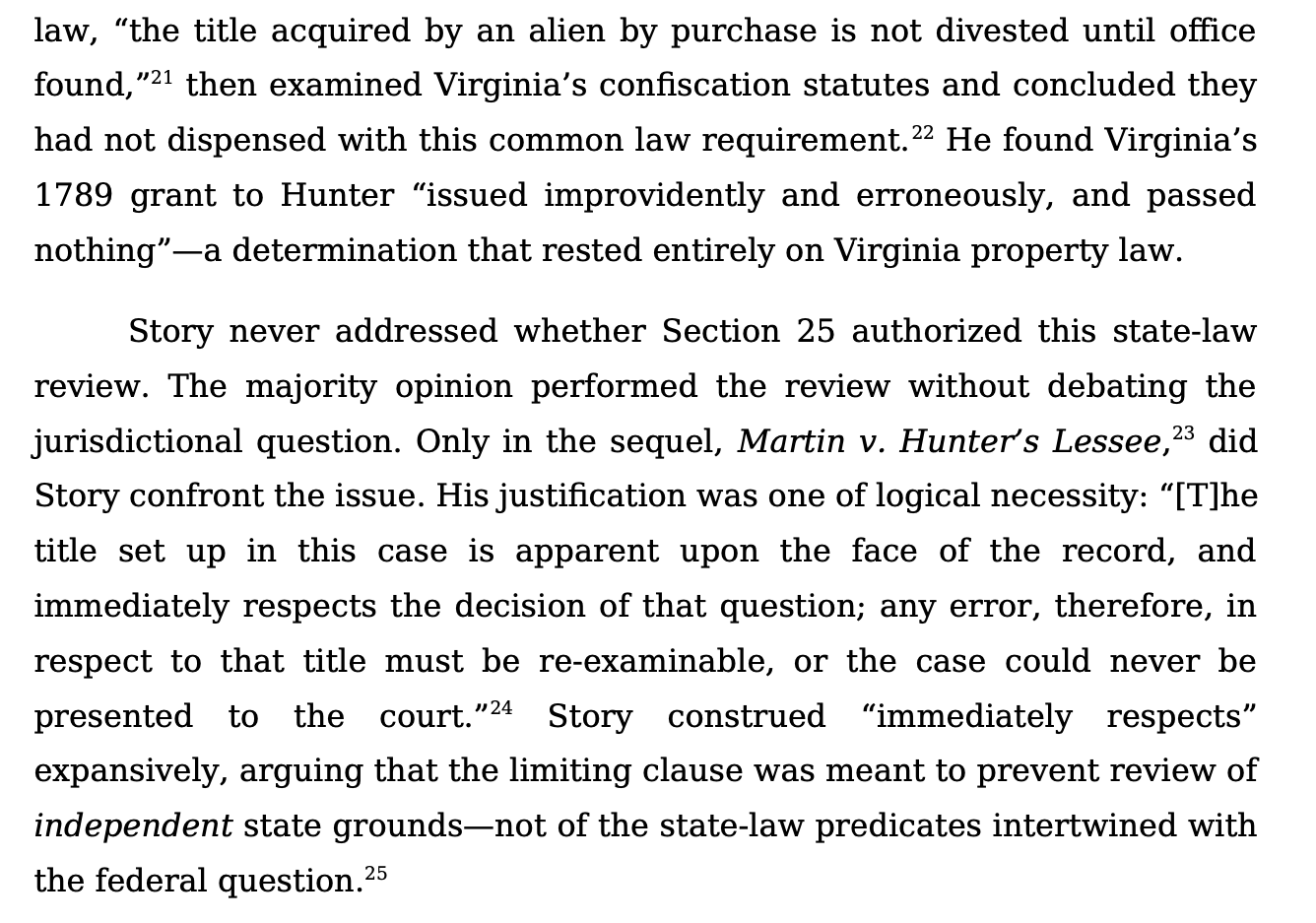

And, okay, I don't think Yale is accepting the little masterpiece, but it does contain insight that represents a researched and grounded amplification of a small spark I provided. For example, these paragraphs would have been difficult for AI credibly to produce without the combination of Claude Cowork orchestration of a powerful Opus 4.6 model grounded by a Midpage MCP connector:

Here's a section from that same Word file relating the Murdock case and modern Supreme Court jurisdiction doctrine to Fairfax's Devisee v. Hunter's Lessee, a case that it was able to actually read, not just rehydrate from non-specific training data:

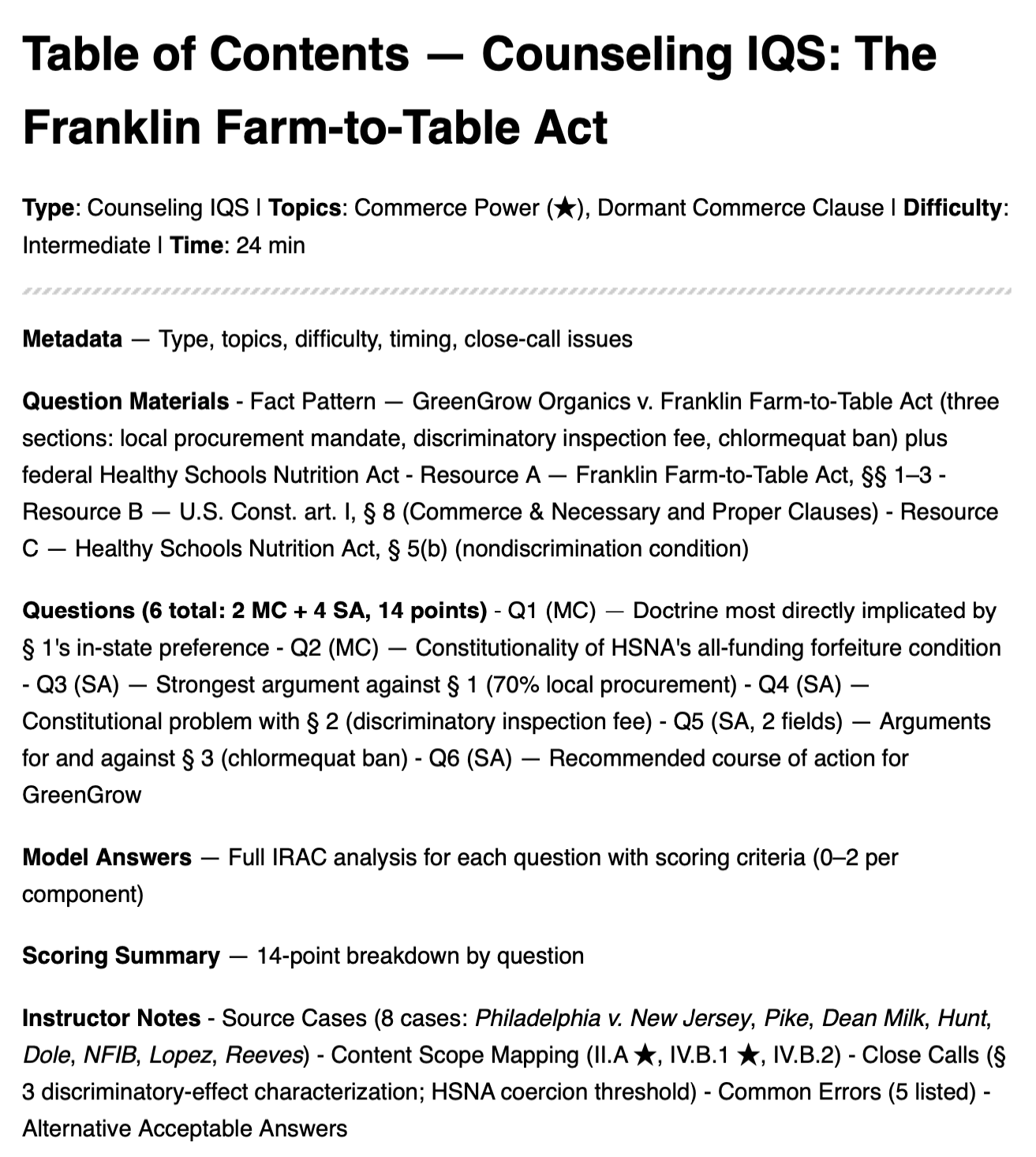

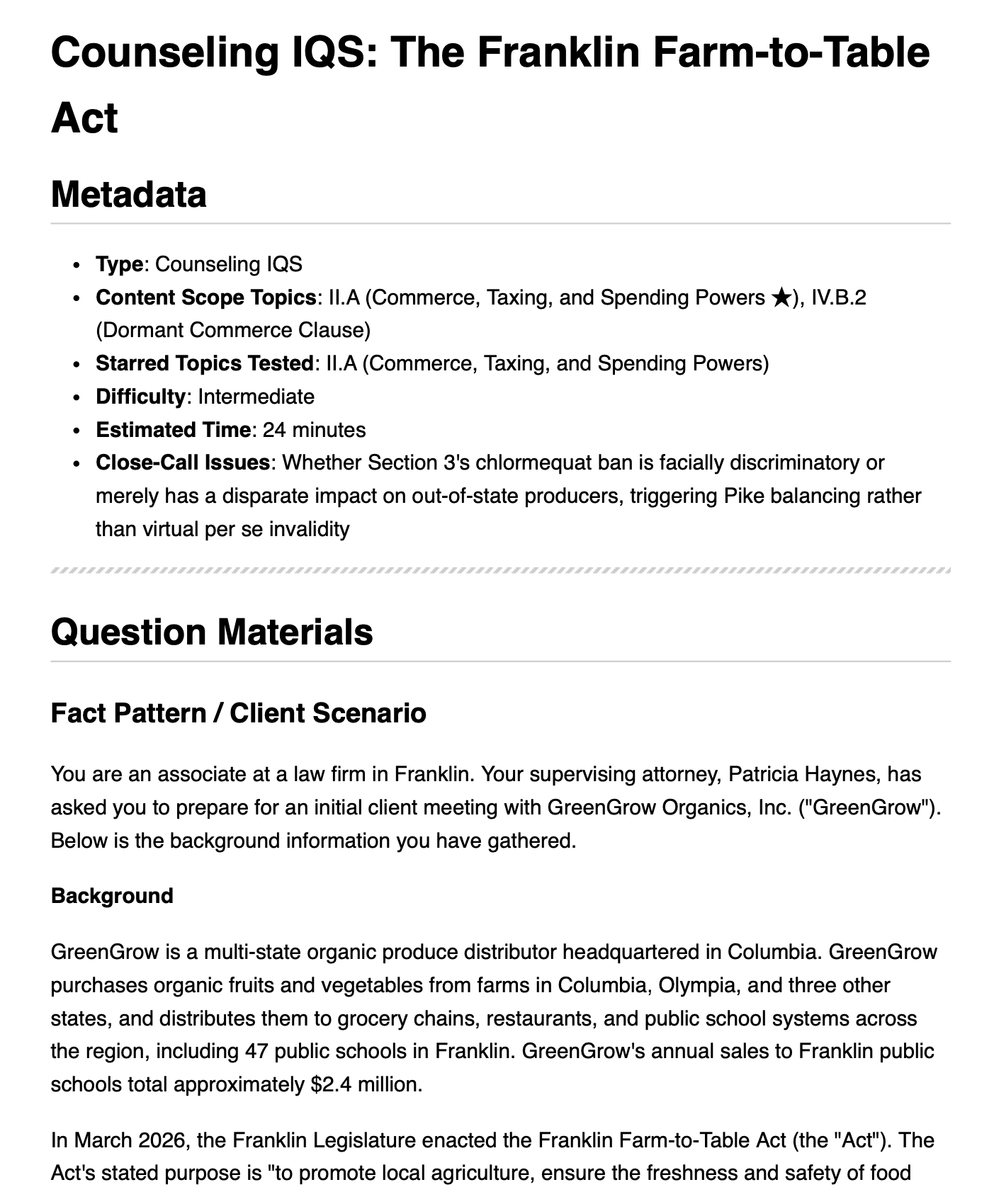

A Constructed Response NextGen skill

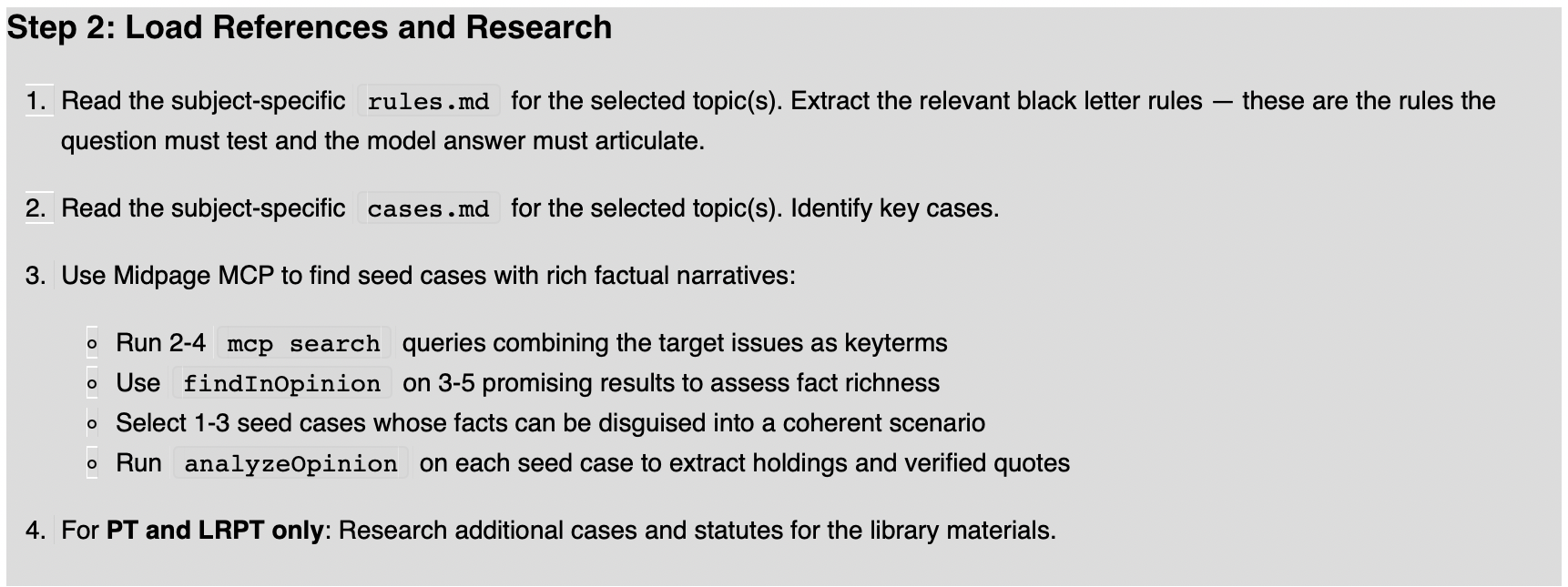

Finally, consider this. I fed Claude Cowork public materials on "Constructed Response" questions that will appear on the NextGen bar exam along with an outline from the NextGen people on the areas of law that its test might cover (a/k/a "content scope"). I then asked Claude Cowork the following:

[U]se your midpage ai mcp abilities come up with a list of all the cases one would need to be familiar with to understand the scope. You will need to do a fair amount of research. If it is too big a task, break it up into parallelized chunks or take it an area at a time.

Claude Cowork then began to churn through the material using the Midpage connector.

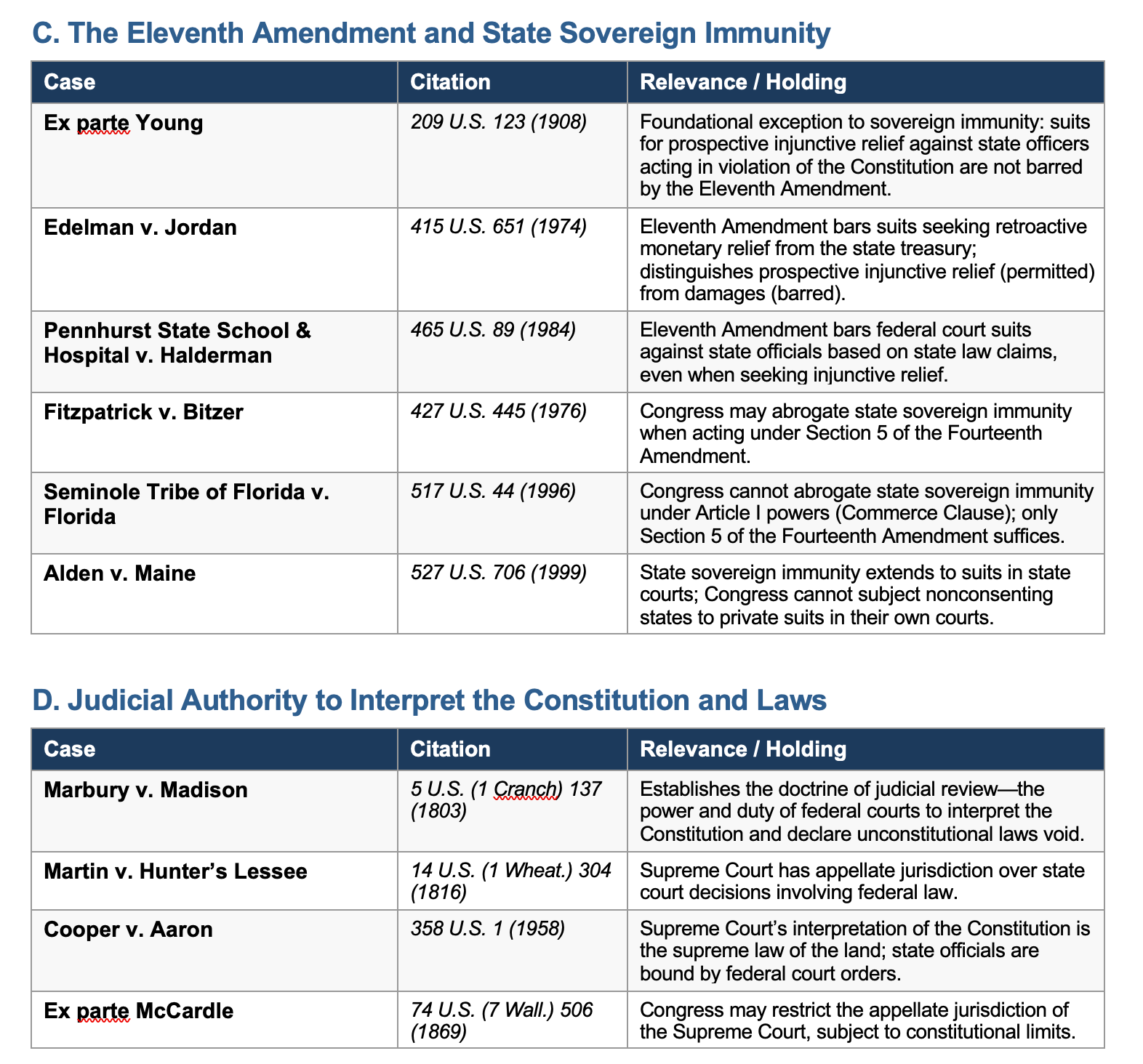

The research took several minutes. This is not a workflow for the impatient. But the result was a 21-page Word file organizing the constitutional law material that the NextGen bar exam might cover. Here's an example.

I then took the Killer App further.

Now take the original scope document and the case reference document you put together and create a list of black letter rules a student would need to know to perform brilliantly on the NextGen bar in con law. Again, this will be a large project. Key each rule back to a case and a place in the scope document.

Claude Cowork created a set of research agents each one of which had access to the Midpage MCP connector.

Here's a sample of the 22-page Word document it created.

I then went meta. I asked Claude Cowork to create a Claude skill that would generate NextGen-style "Constructed Response" questions from a pretty basic user prompt. And not just the question but an answer and grading rubric too. Execution of the skill would require the agent to have access not only to the materials we had previously generated but to the Midpage MCP connector to ground specific questions and responses in real full-text legal data.

Claude Cowork proved up to the job. Here is some of what is contained in the resulting skill.

I've begun preliminary testing on the new skill. And the smart thing to do would be to equivocate, to say something like "preliminary results are encouraging." But that wouldn't be true. Preliminary results are spectacular. Here's a table of contents of the output along with a snippet.

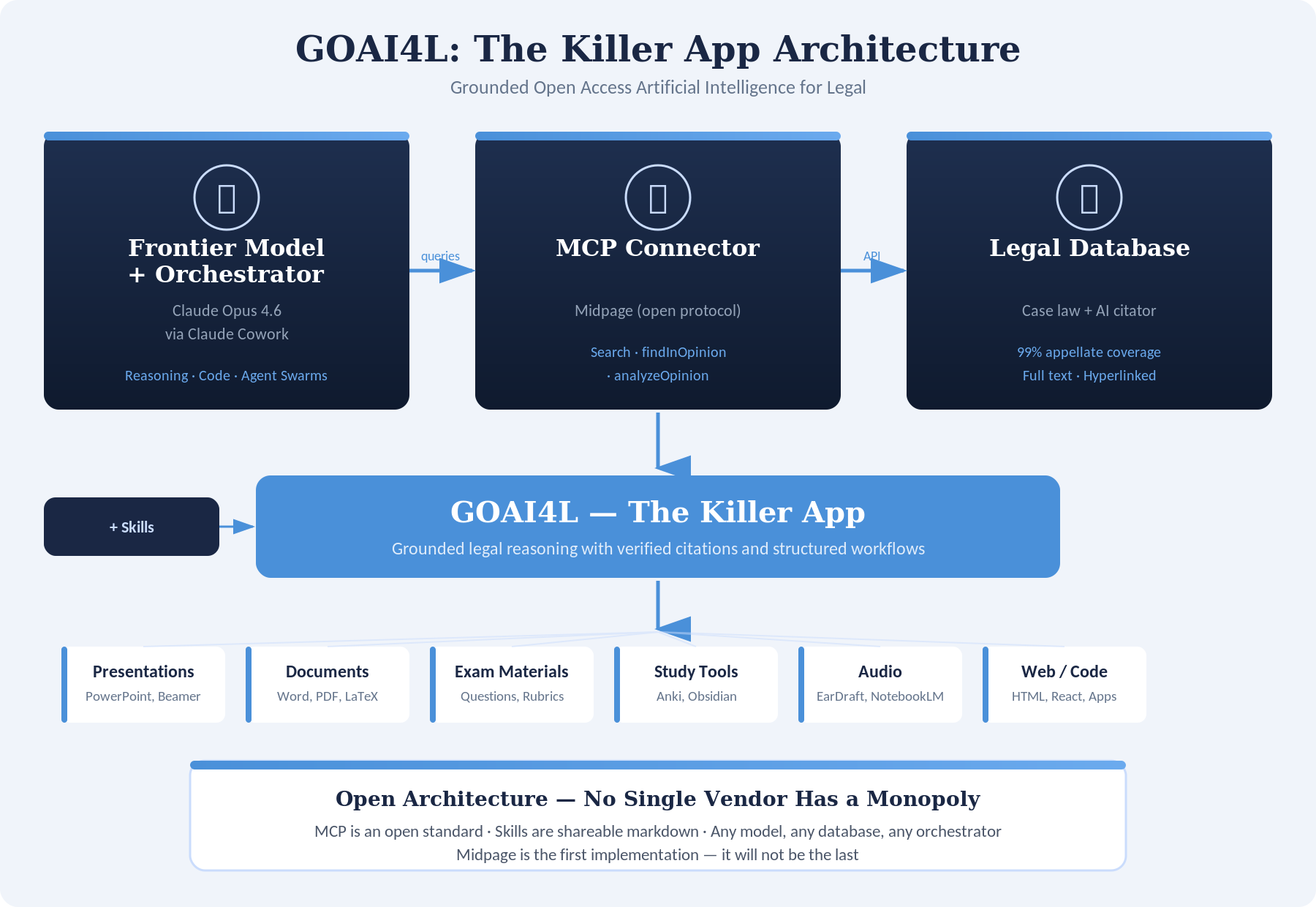

The Architecture is the App

You might think from reading this entry so far that it is a paean to Claude Cowork and to Midpage with its MCP connector. And that wouldn't be wrong. But it would miss half the point. That combination isn't the Killer App. It's just an implementation. The killer app is the architecture, not any single vendor. It's the fusing via off-the-shelf technology of (a) the general intelligence of a frontier model with (b) an orchestration agent capable of managing sequential and parallel tool-equipped agent swarms with (c) a connection to grounded legal data. When that combination becomes available, an extraordinary range of pedagogical tasks become not just possible but easy.

Installation

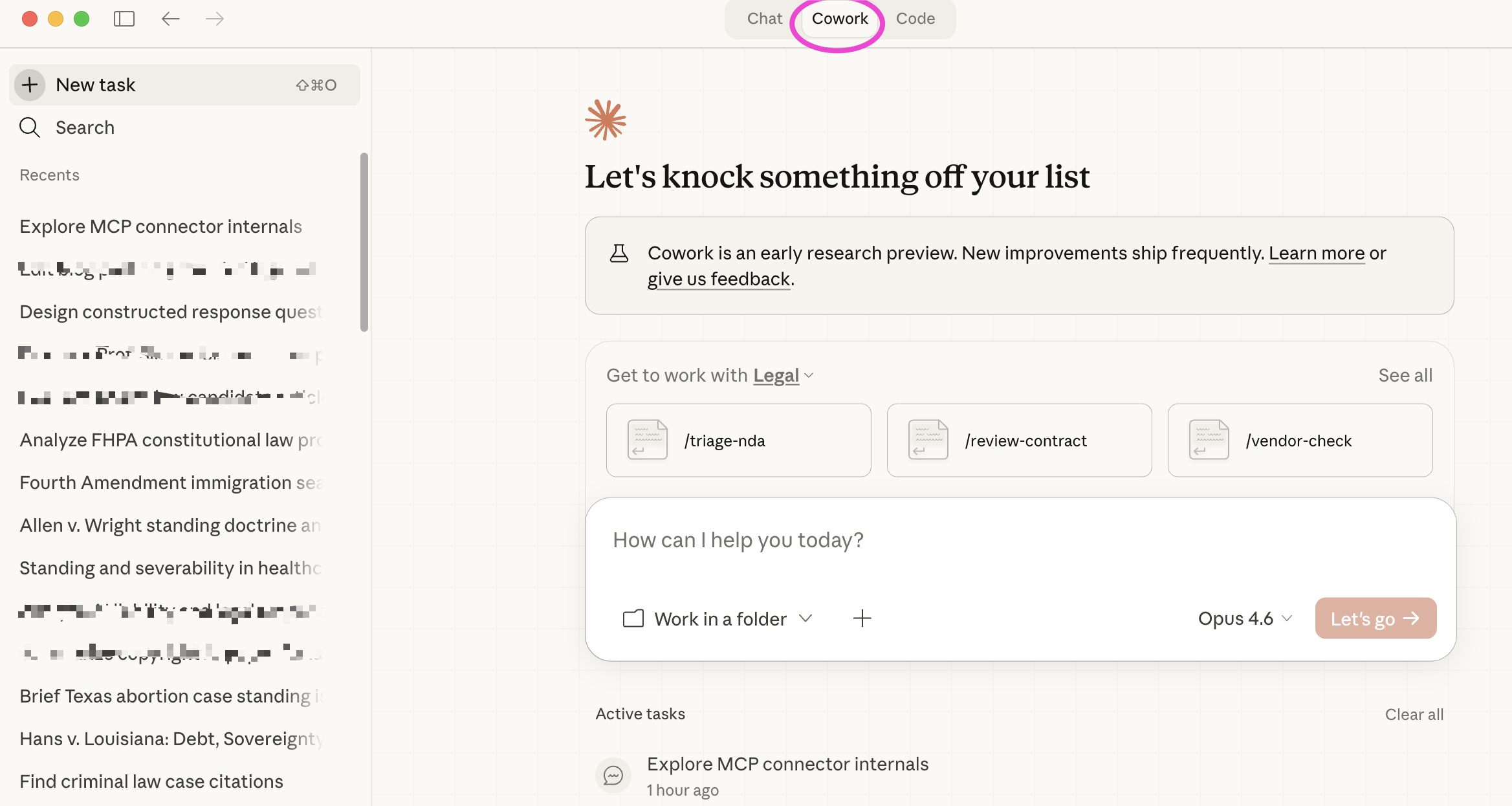

The good news is that it's easy to set up the needed architecture. Claude Cowork is just part of the Claude Desktop app. Basically, you download the app and select the Cowork tab.

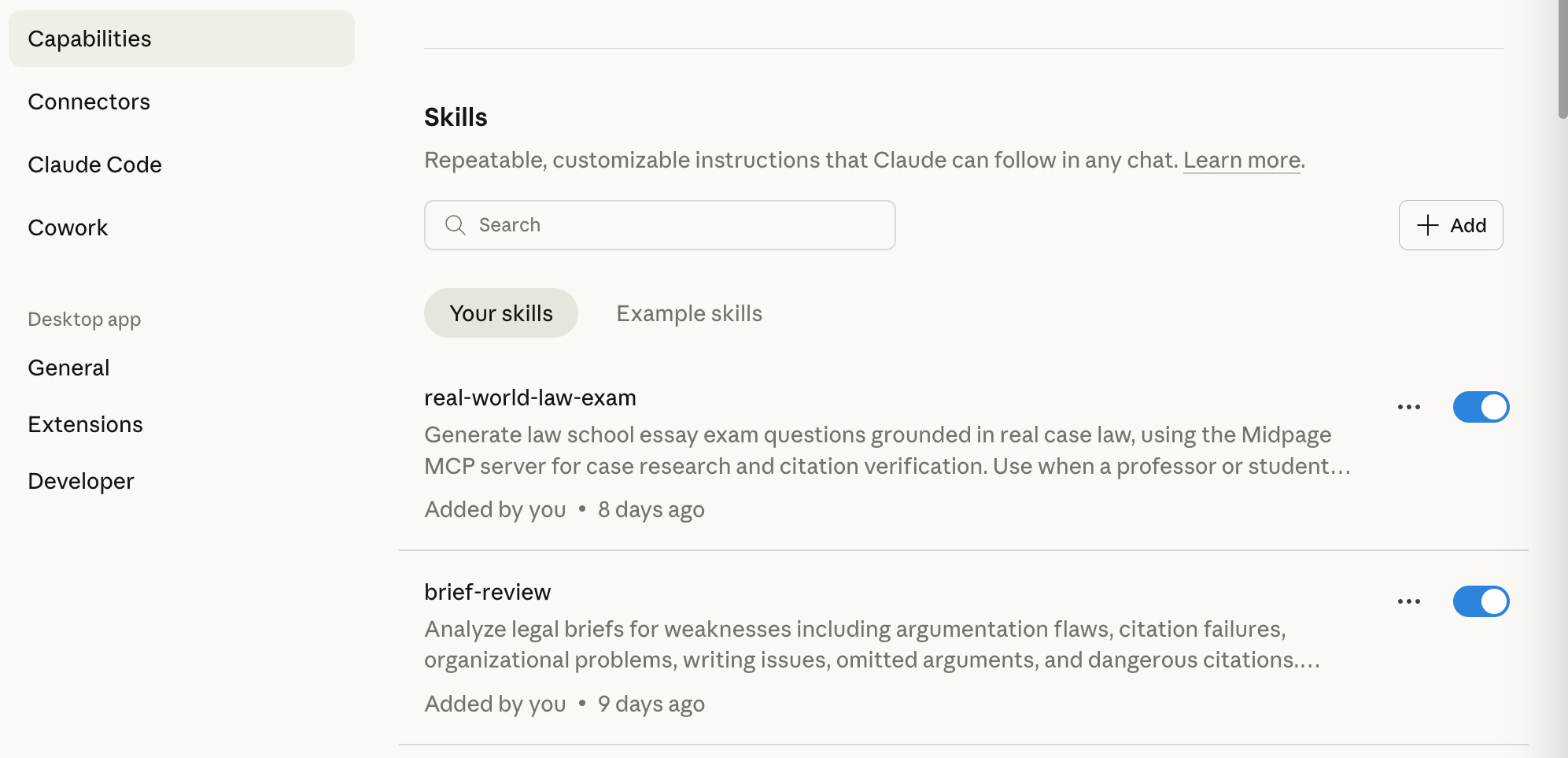

You add skills the same way for Cowork as you do for the conventional chatbot version of Claude and for Claude Code. There are alternative mechanisms, but the easiest method is to go to the settings and then select "Capabilities." You can add a skill by generating one then and there, using the Claude skill-generating skill, or uploading a skill file you generated elsewhere or previously.

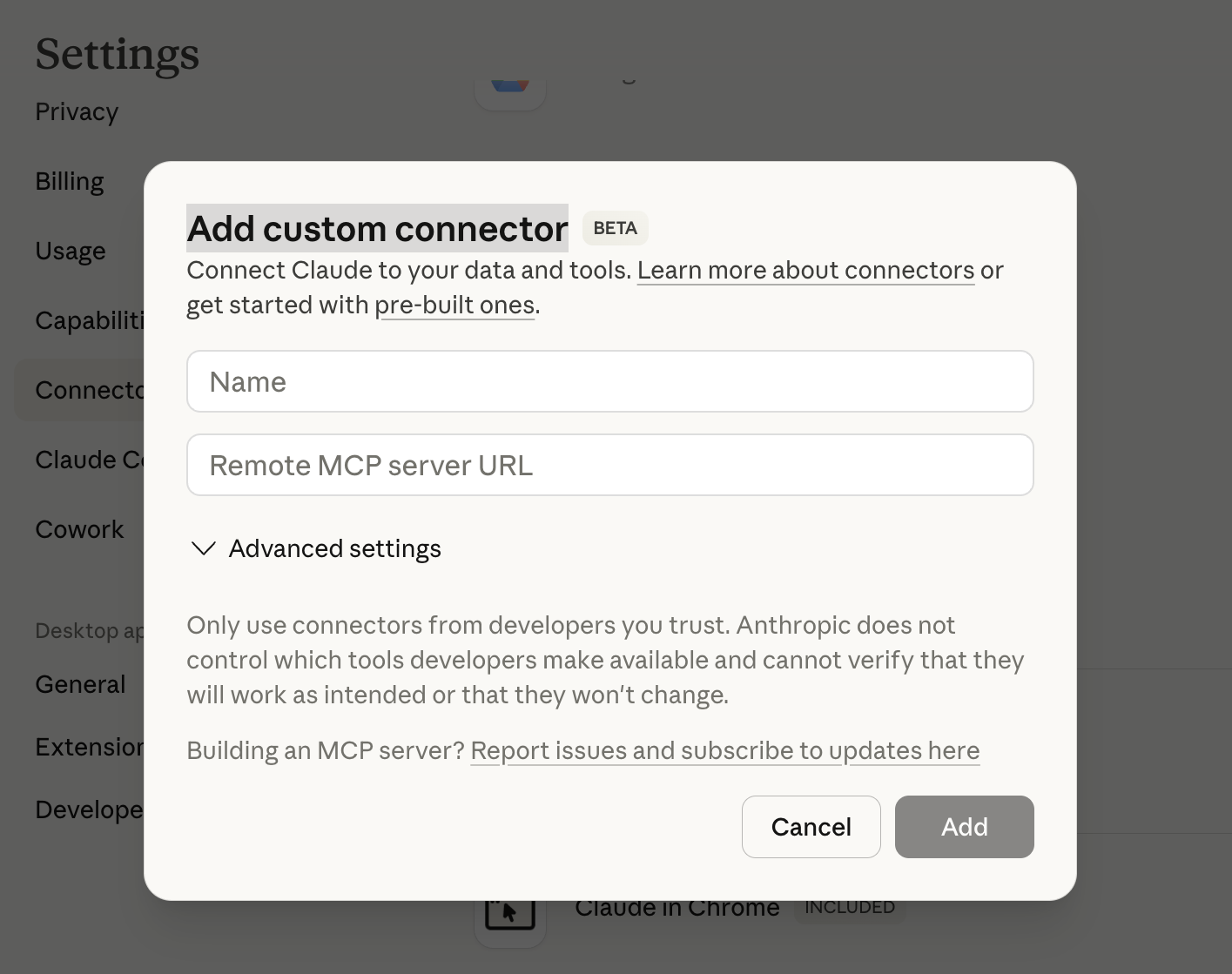

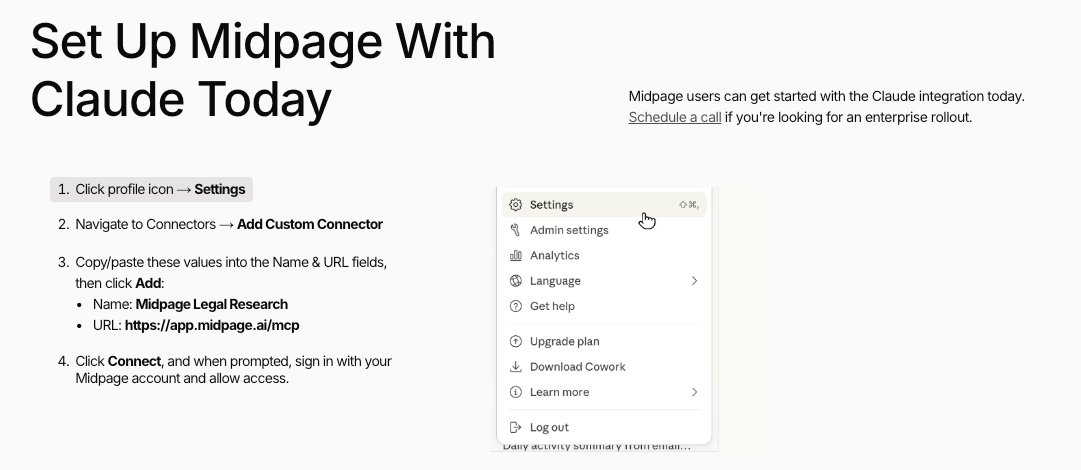

Hooking up a connector such as the Midpage MCP is likewise simple. Press the "Add custom connector" button in the Connectors part of the settings page to reach this page.

Then just fill in the information provided here by Midpage.

And while it's true that as of mid-February 2026 the Cowork component works only on Macs [insert sad face emoji here], Anthropic says a Windows version will be available "soon." Given the incredible popularity of Cowork, I am inclined to believe Anthropic, which claims that it built the Mac version in just a few weeks using Claude Code. Moreover, the impatient can get many of the same results using the "Code" tab of the Claude Desktop app, which is currently available for Windows (and Linux too).

There is no moat

Moreover, neither Anthropic (Claude) nor Midpage has much of a moat around what they have done. The architecture is open. Midpage uses the Model Context Protocol, an open standard. They are already building an OpenAI integration, so Claude will not have a monopoly. You will surely be able to use OpenAI's Codex instead. I cannot believe that Google's Gemini is far behind; imagine Gemini intelligently connecting via an upgraded version of its CLI app to the legal materials in Google Scholar but better organized as a result of Google's massive compute capabilities being applied to it.

Other big players can compete even more vigorously in this space if they choose to do so. If LexisNexis (RELX) and Westlaw (Thomson Reuters), those venerable but stubbornly backward-looking custodians of legal information, could summon the institutional will to stop treating AI as a threat to be managed and start treating it as infrastructure to be built, they could do this too. They have the data. They have the citators. They have the client relationships. What they appear to lack thus far is the imagination to blow up the legal tech world by opening their systems to the models their customers are already using. And instead of letting third parties develop and control connectors to legal databases, the frontier AI companies could create an integrated system: they could build out the connectors and spend a fraction of their money buying the rights needed to build a great legal database. There's a precedent, by the way. If you want to make legal tech companies nervous, show them what Anthropic is already doing in medicine by tightly coupling its frontier models to grounded medical databases.

Footnote: In between the time I started writing this blog entry and the time I pressed submit, yet another entrant has joined the GOAI4L arena: opencase.com. It provides a typical large language model front end to users and uses a sophisticated language model but grounds its answers in a legal database. What potentially converts it, however, from 2025 tech to a killer app, is the ability to access the database via an API call. That's not a far step from making an MCP client available. I have not yet really assessed, however, how well opencase operates and have not paid the as-yet-unspecified amount for API access. (Maybe they should let professors test it out for free?) Moreover, GitHub is already littered with open-source variants of Claude Cowork, some of which use inexpensive Chinese language models as their backend. (I am prohibited by Texas law from routing state work through certain foreign-controlled AI services, but students face no such restriction, and the cost savings are substantial.)

Indeed, if anyone wanted evidence of the power of combining an easy-to-use orchestration layer of agents with the ability to connect to data and services, one need only look at the "SaaSpocalypse" (Software as a Service Apocalypse) stock market dive of early February 2026. When Claude Cowork's plugins launched recently (eleven of them, spanning legal, finance, sales, and marketing), the market wiped $285 billion from software stocks. Thomson Reuters dropped 16% in a day; RELX fell 14%. The carnage was not limited to legal information companies. Anyone whose business model depends on selling workflows that a frontier model can now orchestrate got punished. The lesson for LexisNexis and Westlaw is not that legal data is worthless. It is that investors no longer believe incumbent platforms will deliver that data the way their customers now expect it.

The Technology inside The Killer App

As mentioned, there are really three components to The Killer App. The first is the orchestration agent coupled to a great frontier model. Here, I have been showing Claude Cowork harnessed to Opus 4.6. The second is the MCP connector that mediates interaction between the orchestration agent or model and the legal database. And the third is a legal database capable of responding to API calls or similar technologies rather than relying solely on mouse movements, clicks and other windowing gestures as the interface.

Here's a schematic (generated by Claude Cowork after reading a draft of this blog entry) that illustrates the concept.

Let's go through each of those components in more detail.

The Orchestration Layer

The tasks I described above require more than a chatbot, even a frontier chatbot. To succeed well, they require an agentic orchestrator: a desktop environment where a frontier model can execute code, create files, connect to external data sources through protocols like MCP, and run structured skills. It needs an AI that can work autonomously for long periods of time (often more than 10 minutes) on challenging and integrated tasks. If you look at some of the earlier screen captures of this blog entry, you can see the agentic orchestrator in action, using the Midpage MCP connector, calling on tools to produce PowerPoint files and checking its own work.

Claude Cowork is currently the most complete implementation of this pattern. It runs in a lightweight sandbox on your computer, it has access to your files, it supports MCP integrations like Midpage, and it has a suite of legal skills and plugins installed. (Plugins are basically pre-packaged combinations of skills, connectors and specialized "slash commands.") You can review a contract with the legal plugin, search for relevant case law through a grounded data connector, generate a case brief, convert it to a slide deck, and export it to your workspace folder. It can send out swarms of subagents to break a difficult task into pieces and figure out the order in which the subtasks need to start and complete in order to make progress. It produces files: Word documents, PowerPoint presentations, Excel spreadsheets, PDFs, LaTeX, Markdown, HTML, React components. It can convert case briefs to Beamer presentations for classroom use. It can transform analytical prose into listening-optimized text for podcasts using the EarDraft skill I discussed in a prior blog post and now available on GitHub: feed it a case analysis and it produces a script optimized for text-to-speech delivery. It can build any number of apps. You can do it all in a single session without switching applications. Sessions are saved so you can come back to them. Think of it as a very talented employee.
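To make the idea of ordering subtasks concrete, here is a minimal sketch of dependency-aware scheduling using Python's standard `graphlib` module. It is not how Claude Cowork is implemented (I have no visibility into that); the subtask names and the `plan_waves` helper are hypothetical and serve only to illustrate how a planner can work out which pieces of a job may run in parallel and which must wait.

```python
from graphlib import TopologicalSorter

# Hypothetical subtasks for a grounded slide deck. Each key maps to the
# set of subtasks that must finish before it can start.
dependencies = {
    "search_cases": set(),
    "research_statute": set(),
    "analyze_opinions": {"search_cases"},
    "draft_outline": {"analyze_opinions", "research_statute"},
    "build_slides": {"draft_outline"},
}


def plan_waves(deps):
    """Group subtasks into waves; everything in one wave can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose prerequisites are done
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so later tasks unlock
    return waves


waves = plan_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

In this toy plan the two research tasks start together, while the outline waits for both the case analysis and the statutory research.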

This means that the outputs of GOAI4L become inputs for an infinite variety of downstream tools. And some of the most powerful integrations are the simplest. Generate a grounded case analysis, then feed it to Google's NotebookLM to create a podcast-style discussion between two AI voices that students can listen to on their commute. Have Claude + Midpage map the doctrinal relationships in a unit and export it as a set of linked Markdown files for Obsidian, where students can navigate doctrine as a knowledge graph rather than a flat reading list. Generate verified holdings and key quotations for a topic, export as CSV, and import into Anki for spaced-repetition study—grounded flashcards in five minutes. Export a set of exam questions to a spreadsheet for your learning management system. Convert a casebook chapter into a website or put it in LaTeX for professional typesetting. (By the way you can now use OpenAI's Prism AI to help you with LaTeX). Build an interactive HTML review tool that lets students click through hypotheticals. Create a virtual museum of an area of law with far less effort than the Trump v. CASA museum highlighted in one of my earlier blog posts.
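The flashcard export mentioned above is about as simple as downstream integrations get. Here is a rough sketch, assuming a two-column (front, back) CSV layout of the kind Anki can import. The `cards` list and the `cards_to_csv` helper are hypothetical, and the card text is my own hand-written paraphrase for illustration, not output from Midpage or any other tool.

```python
import csv
import io

# Illustrative cards only: the wording is a hand-written paraphrase,
# not output from Midpage or any other tool.
cards = [
    {
        "front": "Michigan v. Long, 463 U.S. 1032 (1983)",
        "back": "If a state decision fairly appears to rest on federal law, "
                "the Court presumes there is no independent and adequate "
                "state ground unless the opinion plainly says otherwise.",
    },
    {
        "front": "Murdock v. City of Memphis, 87 U.S. 590 (1875)",
        "back": "On review of a state court judgment, the Supreme Court "
                "decides the federal questions and does not re-decide "
                "questions of state law.",
    },
]


def cards_to_csv(rows):
    """Serialize cards as two-column CSV (front, back) with no header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        # csv.writer quotes fields containing commas, so citations stay intact
        writer.writerow([row["front"], row["back"]])
    return buffer.getvalue()


csv_text = cards_to_csv(cards)
print(csv_text)
```

Writing `csv_text` to a file gives something a flashcard program can ingest, with the citation on one side and the rule on the other.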

And because skills are just markdown and JSON files, they live naturally in GitHub repositories readily available to all for cloning, forking, or reporting issues. A Con Law professor's exam generator becomes a starting point that a Torts professor can fork and modify. A clinic's brief review workflow becomes a template that other clinics adapt. This is open-source pedagogy for legal education: professors sharing not just syllabi but the AI workflows that produce their teaching materials. Grounded legal analysis, once the hard part, now becomes the starting material for whatever pedagogical format serves your students best.

The MCP connector

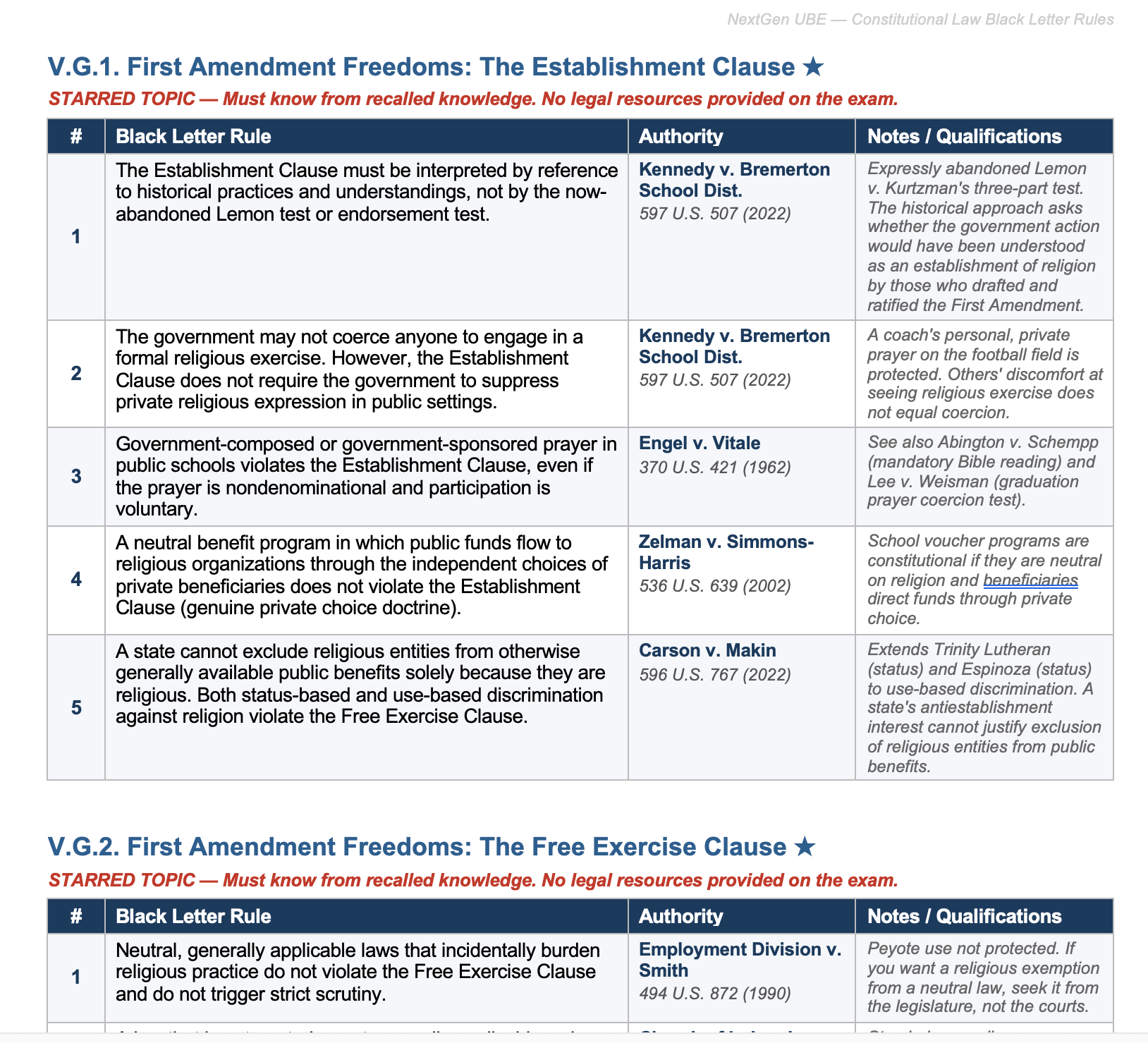

The MCP connector mediates between user query and the legal database to give a frontier model such as Claude Opus 4.6 the information it needs as part of an agentic workflow. From what I can see (and I actually asked Claude Cowork to help me here), the Midpage version consists primarily of three tools: Search, findInOpinion, and analyzeOpinion.

Search lets Claude run sophisticated queries (keywords, Boolean logic, proximity operators) across Midpage's database of federal and state case law, which covers 99% of binding appellate decisions and offers near-complete coverage of unpublished federal cases. Results come back with metadata, highlights, and treatment data from Midpage's AI-powered citator, which flags whether a case has been overruled, criticized, or distinguished. Once you modularize the functionality into a frontier model for discussions, an MCP to broker query and response between user and data, and a well-organized comprehensive legal database, it just doesn't take that much to get spectacular results. Here's a substantial part of the instructions contained in the MCP.

Search US case law across federal and state courts. Returns metadata, highlights, and citator treatment data.

WHEN AND HOW TO USE THIS TOOL:

Use this tool to identify US legal opinions that are potentially relevant to the user's question. This is your default legal research tool.

Use this tool if your response requires or is likely to require any legal analysis. Alternatively, if the user references a specific citation, you can analyze it directly using analyzeOpinion.

Do not presume to know the answer to any legal question - do your research using the tools provided to you.

Always use this tool in conjunction with the findInOpinion and/or analyzeOpinion tools.

HOW NOT TO USE THIS TOOL:

Do not use this tool alone. This tool is a first step. Follow up with findInOpinion and analyzeOpinion tools to review cases more granularly.

You can NEVER cite a case in your response until you have used the analyzeOpinion tool, at minimum, on that case.

WHAT YOU GET:

documentId: Use this ID with findInOpinion() and analyzeOpinion()

metadata: Bluebook citation (which contains party names, court, date, docket and/or reporter citation)

highlights: Brief snippets showing why this case matched (NOT for quoting—use findInOpinion for quotes)

treatment: Citator status (positive/negative/caution/neutral), citation counts

QUERY TIPS:

The search works best with keyterms and concepts, e.g.:

personal jurisdiction minimum contacts purposeful availment

noncompete duration length duration unconscionable

The search also works well with questions or statements, e.g.:

Irreparable harm is the most important factor when analyzing a request for a preliminary injunction.

Do Nevada courts consider Delaware law to be persuasive authority on issues of corporate governance?

The search also works with boolean connections (quotes for phrases, AND, OR, * for wildcards, parentheses for groupings, and W/n for proximity)

"conspicuous notice"

noncompete AND enforc* AND (geographic OR distance OR scope)

("motion to dismiss" W/5 "granted") AND "subject matter jurisdiction"

Run multiple queries in parallel for different legal issues

Use filters to narrow by jurisdiction and date

Each query may return up to 10 results

You can run up to 4 queries per call
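The two limits at the end of those instructions (up to 4 queries per call, up to 10 results per query) imply that a longer research plan has to be split into several calls. Here is a small sketch of that batching. The query strings are taken from the instructions above, but the `batch_queries` helper and the `{"queries": [...]}` payload shape are my own assumptions; the excerpt does not show the tool's actual argument names.

```python
MAX_QUERIES_PER_CALL = 4    # from the instructions: up to 4 queries per call
MAX_RESULTS_PER_QUERY = 10  # from the instructions: up to 10 results per query


def batch_queries(queries, batch_size=MAX_QUERIES_PER_CALL):
    """Split a list of query strings into batches no larger than batch_size."""
    return [queries[i:i + batch_size] for i in range(0, len(queries), batch_size)]


# Example queries copied from the tool's own query tips.
queries = [
    "personal jurisdiction minimum contacts purposeful availment",
    '"conspicuous notice"',
    "noncompete AND enforc* AND (geographic OR distance OR scope)",
    '("motion to dismiss" W/5 "granted") AND "subject matter jurisdiction"',
    "Do Nevada courts consider Delaware law to be persuasive authority "
    "on issues of corporate governance?",
]

# Hypothetical payload shape: one dict per tool call.
calls = [{"queries": batch} for batch in batch_queries(queries)]

# Upper bound on how many results the whole plan could return.
max_results = len(queries) * MAX_RESULTS_PER_QUERY

print(f"{len(calls)} calls, at most {max_results} results")
```

Five queries therefore take two calls, which is the kind of bookkeeping the orchestrator handles without the user ever seeing it.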

findInOpinion takes a specific case and lets Claude search for quotable passages within it using keyword matching. Think of it as Claude flipping through the pages of a case looking for the paragraph it needs. It actually doesn't use AI, which makes it fast and free.
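For readers curious what keyword matching without AI can look like, here is a toy sketch. It is not Midpage's implementation, which I have not seen; the `find_passages` function and the sample text are invented for illustration. The sketch returns the paragraphs of an opinion that contain every requested keyword.

```python
import re


def find_passages(opinion_text, keywords):
    """Return paragraphs containing every keyword (case-insensitive, whole words)."""
    patterns = [
        re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
        for keyword in keywords
    ]
    # Treat blank lines as paragraph breaks and drop empty paragraphs.
    paragraphs = [p.strip() for p in opinion_text.split("\n\n") if p.strip()]
    return [p for p in paragraphs if all(pat.search(p) for pat in patterns)]


# Invented placeholder text, not a quotation from any real opinion.
sample_opinion = (
    "The court first considers its jurisdiction over the appeal.\n\n"
    "A state ground is adequate when it is sufficient to support the judgment.\n\n"
    "The judgment is affirmed."
)

matches = find_passages(sample_opinion, ["state", "adequate"])
print(matches)
```

Because this is plain pattern matching over text the database already holds, there is no model call involved, which fits the description of the tool as fast and free.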

analyzeOpinion is the heavy hitter. Claude sends a case and a legal question to Midpage, and gets back structured analysis: what propositions the case actually supports (with verbatim quotes), the scope and limitations of each holding, whether it is a core holding or mere background, and, crucially, what the case does not address. This last feature is what prevents the kind of over-citation that gives AI-assisted legal research a bad name. Here's an example of the kind of instructions the analyzeOpinion tool contains. Again, with all respect to Midpage, its instructions are well worked out, but they are not the sort of protected works of genius that others would find difficult to build on.

Reviews and annotates a legal opinion, returns key takeaways, holdings, details, excerpts, and tells you how you can use this case in your response. REQUIRED before citing a case.

This tool returns what the case ACTUALLY supports (not necessarily what you asked about). Use this to:

Find out what propositions this case actually supports

Extract verified quotes

Understand the scope/limitations of each proposition

Know what the case does NOT address (to avoid misattribution)

DOCUMENT IDENTIFICATION (provide exactly ONE — omit the others):

opinionId: Midpage document ID from search results (e.g., "7228818", "c4efd75e-22e7-471f-8bda-95e10a13e588"). OMIT this field if you don't have a Midpage ID.

reporterCitation: Bluebook citation (e.g., "556 U.S. 662", "123 F.3d 456") — NOT WL/LEXIS

docket: For cases without reporter citations:

courtAbbreviation: e.g., "S.D.N.Y.", "9th Cir."

docketNumber: e.g., "12-cv-20100" (omit "No." prefix)

HOW TO CHOOSE:

If you have an opinionId from search results, use ONLY opinionId (fastest)

If you have a reporter citation like "556 U.S. 662", use ONLY reporterCitation (omit opinionId)

If you only have a docket number (e.g., "No. 20-16900 (9th Cir.)"), use ONLY docket tuple

NEVER use WL or LEXIS citations — use docket tuple instead

NEVER pass "0" or placeholder values — omit fields you don't have

INPUT:

question: The legal question you want answered. Be specific about the legal element.

RETURNS:

supportedPropositions: Array of propositions this case supports, each with:

proposition: Cite-ready statement of what the case holds (USE THIS TEXT when citing)

quote: Verbatim quote supporting the proposition

scope: Limitations, conditions, what it does NOT apply to

centrality: "core_holding" (strongest), "supporting_analysis" (strong), "secondary_matter" (medium), or "background" (weakest)

doesNotAddress: Topics from your question the case does NOT address. CHECK THIS BEFORE CITING.

summary: Brief summary of what the case is actually about

jurisdiction: Court and jurisdiction

treatment: Citator status and citation count
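Two of the rules in those instructions lend themselves to a short sketch: supply exactly one document identifier, and prefer propositions with stronger centrality. The field names below (`opinionId`, `reporterCitation`, `docket`, `supportedPropositions`, `centrality`, `doesNotAddress`) come from the instructions above. The helper functions and the sample result are hypothetical, with placeholder strings standing in for real tool output.

```python
# Centrality labels from the instructions, strongest first.
CENTRALITY_RANK = {
    "core_holding": 0,
    "supporting_analysis": 1,
    "secondary_matter": 2,
    "background": 3,
}


def build_identifier(opinion_id=None, reporter_citation=None, docket=None):
    """Return a dict holding exactly one identifier, omitting the others."""
    candidates = {
        "opinionId": opinion_id,
        "reporterCitation": reporter_citation,
        "docket": docket,
    }
    # Drop missing values and the "0" placeholder the instructions forbid.
    supplied = {k: v for k, v in candidates.items() if v not in (None, "", "0")}
    if len(supplied) != 1:
        raise ValueError("provide exactly one of opinionId, reporterCitation, docket")
    return supplied


def citable_propositions(result, max_rank=1):
    """Keep propositions at or above the given strength, strongest first."""
    kept = [
        p for p in result["supportedPropositions"]
        if CENTRALITY_RANK[p["centrality"]] <= max_rank
    ]
    return sorted(kept, key=lambda p: CENTRALITY_RANK[p["centrality"]])


# Placeholder result: the strings are stand-ins, not real tool output.
sample_result = {
    "supportedPropositions": [
        {"proposition": "placeholder background point", "centrality": "background"},
        {"proposition": "placeholder supporting point", "centrality": "supporting_analysis"},
        {"proposition": "placeholder core holding", "centrality": "core_holding"},
    ],
    "doesNotAddress": ["placeholder topic"],
}

identifier = build_identifier(reporter_citation="556 U.S. 662")
strong = citable_propositions(sample_result)
print(identifier, [p["proposition"] for p in strong])
```

A fuller version would also compare the question against `doesNotAddress` before citing anything, which is the check the instructions flag in capital letters.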

The Legal Database

Finally, there is the legal database. Ideally it should have broad coverage and be organized in such a way that cases or other materials can be retrieved efficiently. Lexis and Westlaw do great on the coverage front and provide humans with various interfaces to access them, including, in recent months, respectable methods that employ AI. But they avoid opening their systems up to third parties, at least without being paid the kind of money that I am certain Harvey AI paid Lexis to integrate the two products. Free.law, for example, is a legal database with broad coverage of American caselaw but has a non-intuitive API for finding materials and following up on retrievals. Google Scholar is kind of a legal database but it isn't presently a very good one for use by AI in that, while it has broad coverage, including secondary materials, it does not expose an ability to search through its case law holdings in a way that most lawyers find important: "find me all Second Circuit cases involving personal jurisdiction in diversity cases." Midpage, by contrast, is outstanding in its efficiency and permits exactly the sort of searches in which most lawyers would want to engage, but its coverage is not yet as broad as many lawyers want. It is excellent in case law, and has begun to provide coverage of federal and state statutes, but lacks secondary materials that academics and many more senior attorneys prefer because they find pre-built human-created summaries and analyses of primary materials more valuable than those that can be constructed on the fly by AI. And none of these contain the sort of classic mostly-human-generated citators that Westlaw and Lexis boast. (Although Midpage can generate an AI-citator that may in some circumstances be preferable.)

The New Competency: Skill Creation

What does the existence of The Killer App mean for legal educators and those in law school who have previously assumed that legal AI is hazardous because of frequent hallucinations? In a world where grounding greatly diminishes the frequency of hallucinations and orchestration permits AI to run autonomously on complex tasks, the new professional capability that law professors, law students, and young lawyers need to learn is grounded skill creation: the art of designing structured AI workflows that operate in conjunction with grounded data sources.

Claude's Skill Creator tool makes this accessible. You describe what you want the skill to do, write a draft of the instructions, test it against sample inputs, evaluate the outputs, and iterate. You do not need to write code. English really is the new programming language here. This YouTube video from "Ben AI" contains an excellent overview of the concept. But you do still need to think. When should the AI search for cases? When should it verify citations? What output format serves the user best? How should it handle uncertainty? How does its behavior vary depending on the connectors and capabilities to which the user has access?

You don't have to start from scratch. Anthropic's new legal plugin for Claude Cowork—the one that triggered the market selloff—includes skills for contract review, NDA triage, compliance assessment, legal risk classification, and meeting briefings. The contract review skill, for instance, walks Claude through a clause-by-clause review against either a default or user-supplied negotiation playbook, classifying deviations as green (acceptable), yellow (negotiate), or red (escalate), and generating specific redline language with fallback positions. But the legal plugins are open source. You can read them, modify them, and use them as starting points for your own. Indeed, Midpage itself might want to do this: modify Claude's own open source legal skills to include calls to Midpage if the user has it available to them. But we don't have to wait for busy Midpage to make this modification. You can do it yourself.
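The green, yellow, and red triage is easy to picture in miniature. The real skill is written as natural-language instructions, so the sketch below is only an analogy in code. The `playbook` thresholds, the clause names, and the `classify` function are all hypothetical.

```python
from enum import Enum


class Flag(Enum):
    GREEN = "acceptable"
    YELLOW = "negotiate"
    RED = "escalate"


# Hypothetical negotiation playbook: a preferred value and an outer limit
# for each numeric clause term. Real playbooks are written in prose.
playbook = {
    "payment_terms_days": {"preferred": 30, "limit": 60},
    "noncompete_months": {"preferred": 0, "limit": 12},
}


def classify(clause, value):
    """Classify a clause value against the playbook's preferred value and limit."""
    rule = playbook[clause]
    if value <= rule["preferred"]:
        return Flag.GREEN   # within the preferred position
    if value <= rule["limit"]:
        return Flag.YELLOW  # outside preference but inside the fallback range
    return Flag.RED         # beyond the fallback range


for days in (30, 45, 90):
    flag = classify("payment_terms_days", days)
    print(f"{days}-day payment terms: {flag.name} ({flag.value})")
```

Forking a skill amounts to editing the prose equivalent of that `playbook`, or adding a step that calls a grounded connector before a deviation is classified.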

"But Are You Really Asking Professors to Become AI Gurus?"

Yup. That is exactly what I am suggesting. And I know how it sounds. A skeptical colleague might say: we already ask law professors to be scholars, teachers, committee members, and mentors; now you want them to be workflow designers? Students cannot write a coherent IRAC paragraph and you want them building AI skill architectures?

I plead guilty to the charge. And I will go further: those who do not adapt are going to be left behind. Soon. I gave an optional lecture to my 1L Con Law students a week ago on exactly these techniques. The students get it — they see what is possible and I expect many of them to run with it and use these methods, to the extent other professors allow it, across their other courses. And here is a question worth asking: why should any professor prohibit this? What principled reason is there to ban students from using grounded, citation-verified AI tools when we would never ban them from using a study group? Engagement does not cause brain rot.

Here is what is about to happen. The students who figure out how to use these tools — and students figure things out fast when the tools are good — are going to start performing at a level that embarrasses their less flexible peers. They will produce research memos with verified citations in an afternoon. They will generate practice exams for themselves that are better than what most professors provide. They will brief cases with a depth and accuracy that, frankly, will exceed what many professors achieve in their class preparation. They will pipeline the outputs into NotebookLM or similar products that let them produce debates they can listen to in the car. They will create documents that ground a Live Audio discussion they have with the AI while walking their dog.

When the average law student equipped with a grounded agentic orchestrator can routinely produce work product at a higher level than the esteemed professor who refuses to engage, something is going to give. The students will push their professors to adapt, just as they have pushed other changes on reluctant institutions in higher education. Some of the law professoriate will try to regulate this away, exaggerating the perils of large language models to justify banning what they have not bothered to understand. That is not going to work. The professors who learn to build skills, who learn to work with grounded AI, will be the ones who remain relevant (assuming we still have conventional law schools in a decade, a point that some commentators are unwilling to concede).

Future posts will go deep on many of the skills I have described: how to use them, where they shine, where they struggle, and how to build your own. This post is the big picture. The killer app exists. Pick an agentic orchestrator; Claude Cowork is the one I recommend today. Connect a grounded legal data source. Shell out $10 per month and try out the Midpage MCP. I suspect you will be as awestruck as I have been at the results.

Appendix: Artifacts

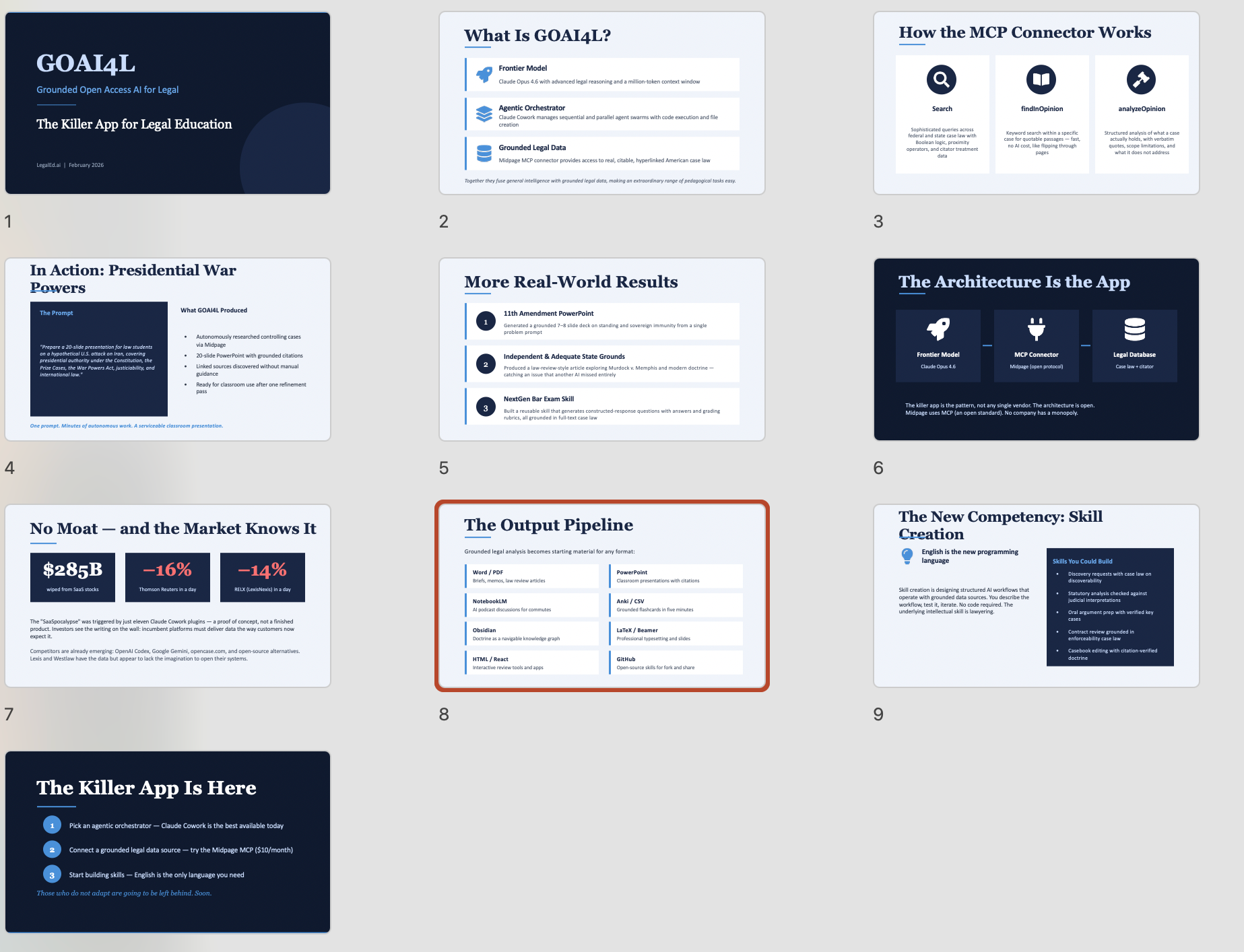

Just to go full meta, I fed the final draft of this blog entry to Claude Cowork and asked it to use its PowerPoint skill to generate a 10-slide presentation. Here's the result.

I likewise asked it to produce an EarDraft version of the blog that would take 15 minutes to deliver. Here's the result.

The Killer App for Legal Education — EarDraft Version

Listening-optimized script. Approximately 15 minutes at natural speaking pace.

Something happened this past month that deserves more attention than it has gotten in the legal education world. A startup called Midpage released an integration that gives Claude—Anthropic's large language model—direct access to real, citable, hyperlinked American case law. And it didn't just make this capability available to the Claude chatbot that many law professors have been experimenting with. It didn't just make it available to Claude's Opus 4.6, a language model with a million-token context window that is probably the best in the business right now at text-based reasoning. It made full-text legal sources available to Claude Cowork, which can orchestrate numerous AI agents running Opus 4.6 sequentially or in parallel, each with access to tools including code execution. In short, it created what I'm calling Grounded Open Access Artificial Intelligence for Legal—GOAI4L—the killer app.

But as they say, don't tell them, show them. Let me walk through several projects that became possible this month because of the combination of Midpage with Claude Cowork.

First, a grounded presentation on presidential war powers. I wanted a canned presentation ready to go for my constitutional law class in case of an American military intervention in Iran. Now, I could have developed something decent using any respectable AI with a little hand-holding—feeding it the Prize Cases, pointing it to the War Powers Act, asking for a Beamer presentation based on our dialog. Instead, I launched Claude Cowork with the Midpage connector active and gave it a single prompt. I asked it to imagine a hypothetical attack on Iran and prepare a twenty-slide presentation covering presidential authority under the Constitution, the Prize Cases, the War Powers Act and its constitutionality, justiciability, and international law. Claude Cowork spent several minutes autonomously researching cases through Midpage, organizing the legal issues, and producing a PowerPoint file with grounded citations and linked sources—most of which it found on its own without my direction. It wasn't perfect on the first pass, but after one more iteration where I provided some of my own teaching materials, I had a very serviceable presentation ready to drop into class if I wake up one morning and find our nation in a regional war without congressional consent.

Second, I gave Claude Cowork a standing and Eleventh Amendment problem and told it to use Midpage to develop a grounded answer, focusing on cases like Whole Woman's Health v. Jackson as well as standing doctrine generally. Then I asked it to turn the answer into a seven-to-eight-slide PowerPoint. It did both.

Third—and this one is particularly interesting—I developed an issue-spotting problem involving the independent and adequate state grounds doctrine. I gave the problem first to a high-powered AI that did not have access to grounded legal data. That AI blew it. It charged ahead declaring that of course the victim of an unwarranted search had constitutional rights, without ever considering whether a state law issue in the state supreme court opinion might defeat Supreme Court jurisdiction altogether. So I gave the same problem to Claude Cowork with Midpage connected, and simply said: write a lawyerly answer to this problem, think hard, it contains a crucial issue that another AI completely missed. I did not point it to Michigan v. Long or any other material on the independent and adequate state grounds theory. Claude Cowork found the issue on its own. It returned a grounded answer citing older cases that were not entirely familiar to me—including Murdock v. City of Memphis from 1875, which it identified as central. When I opened that case in Midpage, I found it was going to be a slog. So I asked Claude Cowork to use a skill I had built—an opinion modernizer—to make it lucid. It did. And then it went further. It produced a short law-review-style document exploring the different treatment of related state claims in federal trial courts versus the Supreme Court. It titled it “Questions, Not Cases: The Asymmetric Power of Federal Courts to Decide State Law.” Yale isn't accepting it, but it does contain genuine insight—a researched and grounded amplification of a small spark I provided.

Fourth, the NextGen bar exam. I fed Claude Cowork the public materials on constructed-response questions for the NextGen exam along with an outline of the areas of law the test might cover—what they call the content scope. I asked it to use Midpage to compile all the cases a student would need to know, then to generate black-letter rules keyed to those cases, and finally to create a reusable Claude skill that generates NextGen-style constructed-response questions with answers and grading rubrics—all grounded in full-text case law via Midpage. Claude Cowork created a set of research agents, each with access to the Midpage connector. The research took several minutes—this is not a workflow for the impatient—but the result was a twenty-one-page case reference document, a twenty-two-page rules document, and a working skill. The smart thing to do would be to equivocate and say preliminary results are encouraging. But that wouldn't be true. Preliminary results are spectacular.

Now let me step back from the demos and talk about why the architecture matters as much as the results.

You might think from what I've described so far that this is a paean to Claude Cowork and to Midpage. And that wouldn't be wrong. But it would miss half the point. That particular combination isn't the killer app. It's just an implementation. The killer app is the architecture, the fusing of three things: the general intelligence of a frontier model, an orchestration agent capable of managing sequential and parallel agent swarms equipped with tools, and a connection to grounded legal data. When that combination becomes available through any set of products, an extraordinary range of pedagogical tasks becomes not just possible but easy.

And what is striking is how easy this is to set up. Claude Cowork is just part of the Claude Desktop app—you download it and select the Cowork tab. You add skills through the settings. Hooking up Midpage takes a few minutes of configuration. As of mid-February 2026, Cowork runs only on Macs, but a Windows version is expected soon, and the Code tab of the Claude Desktop app already works on Windows and Linux.

Neither Anthropic nor Midpage has much of a moat around what they've done. The architecture is open. Midpage uses the Model Context Protocol, which is an open standard. They're already building an OpenAI integration, so Claude won't have a monopoly. Google's Gemini is surely not far behind. And if LexisNexis and Westlaw—those venerable but stubbornly backward-looking custodians of legal information—could summon the institutional will to stop treating AI as a threat and start treating it as infrastructure, they could do this too. They have the data, the citators, and the client relationships. What they appear to lack is the imagination to open their systems. If anyone doubts the urgency of that point, consider the SaaSpocalypse of early February 2026. When Claude Cowork's plugins launched—eleven of them, spanning legal, finance, sales, and marketing—the stock market wiped two hundred eighty-five billion dollars from software stocks. Thomson Reuters dropped sixteen percent in a day. RELX fell fourteen percent. The lesson is not that legal data is worthless. It is that investors no longer believe incumbent platforms will deliver that data the way their customers now expect it.

Let me say a word about the three components underneath the killer app. The first is the orchestration layer. The tasks I described require more than a chatbot. They require what I'll call an agentic orchestrator—a desktop environment where a frontier model can execute code, create files, connect to external data sources, and work autonomously for long stretches on complex tasks. Claude Cowork is currently the most complete implementation. It produces Word documents, PowerPoint presentations, spreadsheets, PDFs, LaTeX, Markdown, HTML. It can send out swarms of subagents to break a problem into pieces and figure out the right order for solving them. Think of it as a very talented employee.

The second component is the MCP connector. Midpage's version gives the model three tools. Search runs sophisticated queries across federal and state case law using keywords, Boolean logic, and proximity operators, with citator data flagging whether a case has been overruled or criticized. findInOpinion lets Claude search within a specific case for quotable passages—fast, no AI cost, like flipping through the pages of a reporter. And analyzeOpinion is the heavy hitter: Claude sends a case and a legal question, and gets back what the case actually holds, with verbatim quotes, scope limitations, centrality ratings, and crucially, what the case does not address. That last feature is what prevents the kind of over-citation that gives AI-assisted research a bad name.

The third component is a legal database that responds to API calls rather than requiring mouse clicks and browser windows. Midpage is outstanding in efficiency and covers ninety-nine percent of binding appellate decisions, but it does not yet have secondary materials like law review articles or treatises. Lexis and Westlaw have broader coverage but haven't opened their systems to third-party AI integration in any meaningful way.

So what does all this mean for legal education? The existence of the killer app raises a question that many in law schools have been avoiding. In a world where grounding greatly diminishes hallucinations and orchestration permits AI to run autonomously on complex tasks, the new professional capability that professors, students, and young lawyers need to learn is grounded skill creation—the art of designing structured AI workflows that operate with grounded data sources.

Claude's Skill Creator tool makes this accessible. You describe what you want the skill to do, write a draft of instructions, test it, evaluate, and iterate. You do not need to write code. English really is the new programming language. But you do need to think carefully. When should the AI search for cases? When should it verify citations? What output format serves the user best? How should it handle uncertainty?

And yes, I am asking law professors to learn this. I know how it sounds. But I gave an optional lecture to my first-year constitutional law students on exactly these techniques. The students get it. They see what is possible. And here is a question worth asking: what principled reason is there to ban students from using grounded, citation-verified AI tools when we would never ban them from using a study group? Engagement does not cause brain rot.

Here is what is about to happen. The students who figure out how to use these tools—and students figure things out fast when the tools are good—are going to start performing at a level that embarrasses their less flexible peers. They will produce research memos with verified citations in an afternoon. They will generate practice exams that are better than what most professors provide. They will brief cases with a depth and accuracy that will exceed what many professors achieve in their own class preparation. They will pipeline outputs into NotebookLM for debates they can listen to in the car. They will create documents that ground a live audio discussion with AI while walking the dog.

When the average law student equipped with a grounded agentic orchestrator can routinely produce work product at a higher level than the esteemed professor who refuses to engage, something is going to give. The professors who learn to build skills, who learn to work with grounded AI, will be the ones who remain relevant. Those who do not adapt are going to be left behind. Soon.

The killer app exists. The architecture is open. Pick an agentic orchestrator—Claude Cowork is the one I recommend today. Connect a grounded legal data source. Shell out ten dollars a month and try the Midpage MCP. I suspect you will be as awestruck as I have been at the results.