Improve Law Exam Essays with this new AI-Powered App

A free web app available exclusively to legaled.ai subscribers uses AI to provide swift feedback and scores to help law students develop their exam essay responses.

Most law schools continue to rely on essay exams to evaluate students. So too do state bars. While mastery of the subject matter partly determines how student work is evaluated, so too do skills of organization and clarity in writing essay responses. But students often receive inadequate opportunities to practice responding to open-ended essay questions. It is simply too time-consuming for professors to examine practice essay responses, and the generosity that doing so requires is not generally rewarded at promotion time or throughout one's career.

Using Standard Raw Prompting to Receive Essay Feedback

This is where AI can help. What an AI generally needs is the question, the student answer, and a model answer, a scoring rubric, or both. You can then just toss all of that, with a little bit of organization, into an AI through its standard web interface and request feedback. Here's what the structure of a sample query might look like for an ultra-basic sample essay exam.

<question>Discuss the significance of Marbury v. Madison (1803) in establishing judicial review. (50 words or less)</question>

<student>Marbury v. Madison is important because it gave courts power to check laws. It helps keep government fair. (20 words)</student>

<model>Marbury v. Madison (1803) established judicial review, empowering courts to nullify unconstitutional laws, ensuring checks and balances. (19 words)</model>

<rubric>Award 5 points for mentioning judicial review. 5 points for addressing checks and balances. (15 words)</rubric>%%% Give me a score from 0 to 100 on the student response based on the model answer and the scoring rubric

And this should technically work. If the professor has not provided a model answer or a scoring rubric, no big deal. Before running the query above, you can ask AI to generate both a model answer and a rubric and then reference or plug them into the query above. Moreover, the AI is likely to give the student good feedback, particularly if the student sends the query to one of the good reasoning models available today from OpenAI (ChatGPT), Google (Gemini), xAI (Grok), Anthropic (Claude) and others. It won't be exactly the same as the professor's feedback. The AI may have different blind spots than the professor or a student tutor does. But in my experience, at least in the well-plowed areas likely to be covered by basic law school courses, it does a quite respectable job. It might not get every nuance, but it is more objective and reliable than most realistic alternatives. So, really there is no need to use the app I am about to recommend. But ...

This raw prompt method has several problems. First, the student can't avoid seeing the model answer and scoring rubric. It's hard to unsee them. Second, this method can require cutting and pasting from word processors to get the student answer into the prompt. If you have to do that once, it's not a big deal, but when you keep iterating on your answer, repeated cutting and pasting can be a first-world nuisance. And there's the issue of explaining to the AI that it should keep the rubric and model answer the same but now evaluate a tweaked answer. Again, quite doable, just another layer of complexity.
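For readers comfortable with a little scripting, the prompt assembly described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the code behind any app: the function name `build_grading_prompt` and its parameters are hypothetical, and the tags simply mirror the sample query. Because the question, model answer and rubric are passed in separately, re-scoring a tweaked answer means changing only one argument.

```python
from typing import Optional


def build_grading_prompt(
    question: str,
    student_answer: str,
    model_answer: Optional[str] = None,
    rubric: Optional[str] = None,
) -> str:
    """Assemble a tagged grading prompt shaped like the sample query.

    Hypothetical helper for illustration; at least one of model_answer
    or rubric must be supplied.
    """
    references = []
    parts = [
        f"<question>{question.strip()}</question>",
        f"<student>{student_answer.strip()}</student>",
    ]
    if model_answer:
        parts.append(f"<model>{model_answer.strip()}</model>")
        references.append("the model answer")
    if rubric:
        parts.append(f"<rubric>{rubric.strip()}</rubric>")
        references.append("the scoring rubric")
    if not references:
        raise ValueError("Provide a model answer, a scoring rubric, or both.")
    parts.append(
        "Give me a score from 0 to 100 on the student response based on "
        + " and ".join(references)
    )
    return "\n\n".join(parts)


# Keep the question and rubric fixed; only the draft changes between runs.
QUESTION = "Discuss the significance of Marbury v. Madison (1803)."
RUBRIC = "Award 5 points for mentioning judicial review."
first_prompt = build_grading_prompt(QUESTION, "First draft.", rubric=RUBRIC)
second_prompt = build_grading_prompt(QUESTION, "Revised draft.", rubric=RUBRIC)
```

The resulting string can be pasted into any chat interface; nothing here calls an AI service.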

The Legal Essay Grader Web App

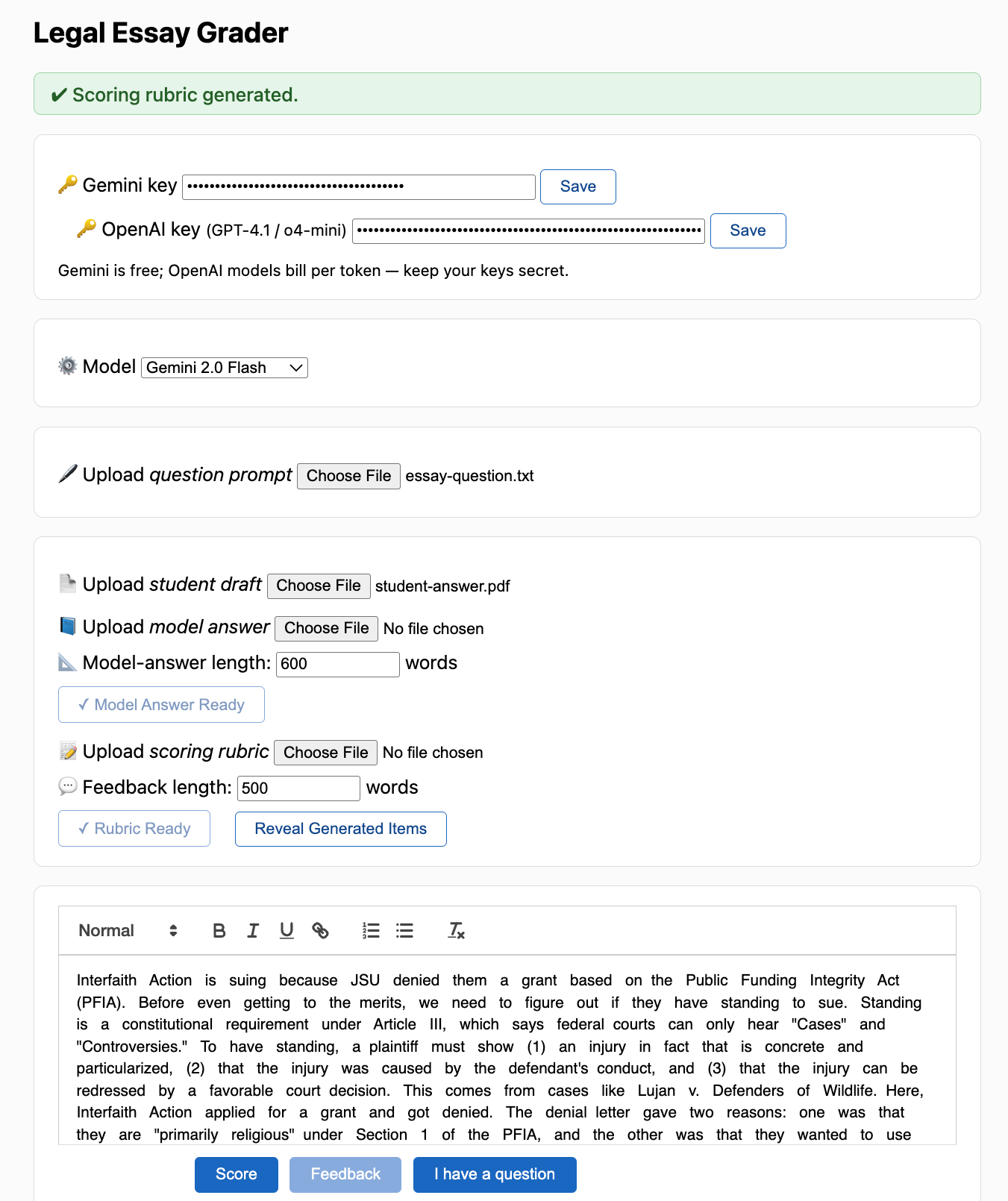

What if, however, there were a free app that organized the prompt structure for you and automatically submitted a correct and complete prompt to an AI for you? What if from within the app you could also ask questions of the AI (e.g. "why wasn't the dormant commerce clause thought relevant") and the AI would know about your scenario: question, draft answer, model answer and scoring rubric. Subscribers to legaled.ai, now have such a web app available. Click here to start using it. And here's a screen shot of the user interface.

Instructions on using the Legal Essay Grader web app

Using the “Legal Essay Grader” web app

Why this app?

Legal writing assignments are usually graded after the deadline, when you’re past the point of revising. The grader solves three pain-points:

- Instant feedback – see a score while you can still rewrite.

- Transparent rubric – understand exactly where points come from.

- Safe iteration loop – edit ➜ rescore ➜ refine until you like the number, then request detailed feedback.

1 · Get (and protect) your API keys

| What you need | Where & how to get it | Store it | Cost |
|---|---|---|---|
| Gemini API key | 1. Visit Google AI Studio → API keys. 2. Click Create API key → copy the string beginning AI…. 3. Paste it into the app’s Gemini key box and click Save. | Keep it in a password manager or an encrypted note, just like a password. | Free |
| OpenAI API key (for GPT-4.1 & o4-mini) | 1. Log in at platform.openai.com. 2. Under Billing → Payment methods add a card or Apple Pay (a $5 deposit is ample). 3. In API keys click + Create new secret key → copy the key starting sk-. 4. Paste it into the app’s OpenAI key box and click Save. | Guard it like a password; anyone with the key can spend your credit. | Pay-as-you-go (≈ $0.015 / 1 k output tokens) |

You only need either a Gemini key or an OpenAI key. You do not need both, though having both will give you more options.

2 · Load your materials

- Required – upload the exam prompt and your draft answer (PDF, DOCX, RTF, TXT all okay). You can also paste and edit directly in the built-in editor.

- Recommended – upload a model answer and a scoring rubric from your professor. These make grading far more realistic.

- No human model answer/rubric? Click Generate Model Answer / Generate Scoring Rubric. Remember: AI will then be grading an AI answer against an AI rubric. The AI might have different emphases than your professor.

3 · Choose a model

| Engine | Speed | Cost (score + feedback) | When to use |
|---|---|---|---|
| Gemini 2.0 Flash | ⚡ 2-5 s | $0 | Quick sanity-check while drafting |
| Gemini 2.5 Flash | ⚡ 40-60 s | $0 | Decent quality |
| Gemini 2.5 Pro | 60 s | $0 | Bigger prompts or tougher reasoning |
| o4-mini-2025-04-16 | 60 s | ≈ $0.02 | Cheap iterative runs |
| GPT-4.1-2025-04-14 | 45-60 s | ≈ $0.10 | Highest accuracy & 128 k context |

The app always allows up to 32 000 output tokens—more than enough for a memo-length critique.

4 · Optional generators

- Model-answer length drop-down selector – only applies if you click Generate Model Answer.

- Feedback length drop-down selector – default 500 words (≈ 650 tokens); move up if you want a deeper critique.

5 · Scoring workflow

- Click Score – returns 0-100.

- Edit your draft, re-score as many times as you like.

- When happy, click Feedback for a narrative explanation.

Fast models score in seconds; GPT-4.1 feedback can take up to a minute for very long outputs.

6 · About costs & the lock-out timer

- Gemini is free for now. ChatGPT from OpenAI is not, but it is still quite inexpensive, particularly relative to other assistance options. Example bundle (~6 k tokens input + 1 k feedback) → ≈ $0.015 (o4-mini) or ≈ $0.075 (GPT-4.1).

- The 3-second lock-out prevents accidental double-billing and gives the model time to stream output.
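If you want to estimate your own costs, the arithmetic behind an example bundle can be sketched as follows. This is a rough illustration only: the function names are hypothetical, the 1.3 tokens-per-word ratio is simply what "500 words (≈ 650 tokens)" implies, and the per-million-token prices in the example are placeholders rather than any provider's published rates, which vary by model and change over time.

```python
def estimate_tokens(words: int, tokens_per_word: float = 1.3) -> int:
    """Rough token count from a word count (assumed ratio, not exact)."""
    return round(words * tokens_per_word)


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Dollar cost of one call, given prices per million tokens."""
    return (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000


# Placeholder prices, invented for illustration: $1.00 per million input
# tokens and $4.00 per million output tokens. Check your provider's
# current pricing page before relying on any figure.
bundle_cost = estimate_cost(6_000, 1_000, 1.00, 4.00)
print(f"${bundle_cost:.3f}")
```

Input tokens usually dominate the count because every call resends the question, your draft, the model answer and the rubric, while output tokens are typically priced higher per token.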

7 · Reality check

LLMs aren’t your professor. They have different blind spots and different levels of patience. Use the numeric score as a thermometer and the feedback to spot missing issues or weak analysis. Iterate until you feel confident.

How a law student can leverage the Legal Essay Grader

Success on law school essay exams usually turns on two inputs: doctrinal mastery and the craft of rapid, organized writing. The Legal Essay Grader web-app gives students a low-friction laboratory in which to hone that craft, using the same large-language-model technology now embedded in commercial research tools. Here is a concrete way a student in Constitutional Law can deploy the grader in the fortnight before a graded formative assessment.

The professor announces that the mid-semester "formative assessment" will include one 30-minute essay on federal judicial power, preemption, and/or the dormant commerce clause. You retrieve last year’s released prompt: “The State of Franklin enacts the 'Legal Harvest Act,' banning wheat grown outside Franklin from being sold within its borders unless the seller certifies to the state that it was harvested by persons lawfully present in the United States. Farmer Olsen, an Oregon grower, sues Franklin, alleging the statute violates the U.S. Constitution. Discuss the constitutional issues.” In a Word file you outline your first draft and upload both the prompt and your answer into the app. The professor doesn't provide a model answer, so you either ask the AI to generate one for you or you collaborate with study friends to create one, which you then upload. You also upload the professor’s rubric (16 pts structure, 24 pts precedent use, 20 pts policy analysis, 40 pts reasoning). Don't look at the rubric. That defeats the whole purpose. If the professor isn't willing to release a scoring rubric, you ask the AI to create one for you. If you ever do want to see the model answer or scoring rubric created by the AI, you can press Reveal Generated Items and they will appear. You can then assess whether the problem with your essay score lies mostly with your work or mostly with some strange answer or scoring system of the AI. Don't blame the AI too swiftly, however!
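The rubric weights in this scenario (16, 24, 20 and 40 points) add up to 100, which is why a 0-100 score maps onto them cleanly. A small sketch of how a category-weighted rubric can be totaled follows. It is one plausible reading of such a rubric, not a description of how the app or any AI model actually computes its score; the function name and the sample breakdown are invented for illustration.

```python
from typing import Mapping

# Category maxima taken from the rubric in the scenario above.
RUBRIC_MAX = {
    "structure": 16,
    "precedent use": 24,
    "policy analysis": 20,
    "reasoning": 40,
}


def total_score(
    awarded: Mapping[str, float],
    rubric: Mapping[str, float] = RUBRIC_MAX,
) -> float:
    """Sum per-category points after checking them against the maxima."""
    if set(awarded) != set(rubric):
        raise ValueError("Awarded categories must match the rubric categories.")
    for category, points in awarded.items():
        if not 0 <= points <= rubric[category]:
            raise ValueError(
                f"{category}: {points} is outside 0-{rubric[category]}."
            )
    return sum(awarded.values())


# The four category maxima cover the full 0-100 scale.
assert sum(RUBRIC_MAX.values()) == 100

# Hypothetical breakdown, invented for illustration only.
example = {
    "structure": 12,
    "precedent use": 14,
    "policy analysis": 12,
    "reasoning": 20,
}
print(total_score(example))  # 58
```

Seeing a total broken down this way makes it easier to tell whether a low score reflects weak reasoning, which carries 40 of the 100 points, or weaker performance in a lighter category.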

First run. Choosing Gemini 2.0 Flash, you click Score. Five seconds later the model assigns 58/100. As it happens, the AI dinged you for failing to distinguish “discrimination in purpose” from “incidental burden” and for failing to spot the preemption issue. But you don't know that yet because you haven't asked for feedback. You now wonder if maybe there was a preemption issue in the question. After all, you recall that immigration is usually handled at the federal level. You go into the built-in word processor within the app and add this to your essay: "The “Legal Harvest Act” imposes a new sanction/condition (documentation proving lawful-status labor) as a prerequisite for selling out-of-state wheat. Under § 1324a(h)(2) (as interpreted in Whiting) only “licensing and similar privileges” can be conditioned by states; Franklin’s outright market-access ban is neither a licensing measure nor consistent with the federal Form I-9 system. Moreover, by creating its own verification documentary regime, Franklin stands as “an obstacle to the full purposes and objectives” of IRCA (Arizona), occupying ground Congress reserved for itself." You press the score button and smile as the score increases to 74. So you sense that including immigration was a good idea but there might yet be problems with your response.

Deep dive. Now you switch the model picker to GPT-4.1, knowing each call costs a few pennies but yields tighter reasoning. You lock in the improved answer and click Score again. GPT-4.1 returns 76, confirming Gemini’s ballpark. Satisfied that the baseline is solid, you press Feedback (500-word option). The app responds with a detailed critique of your performance and associates the critique with the score you received. You learn that your dormant commerce clause analysis wasn't strong and that you needed to discuss National Pork Producers Council v. Ross. You also learn why your preemption analysis was shallow.

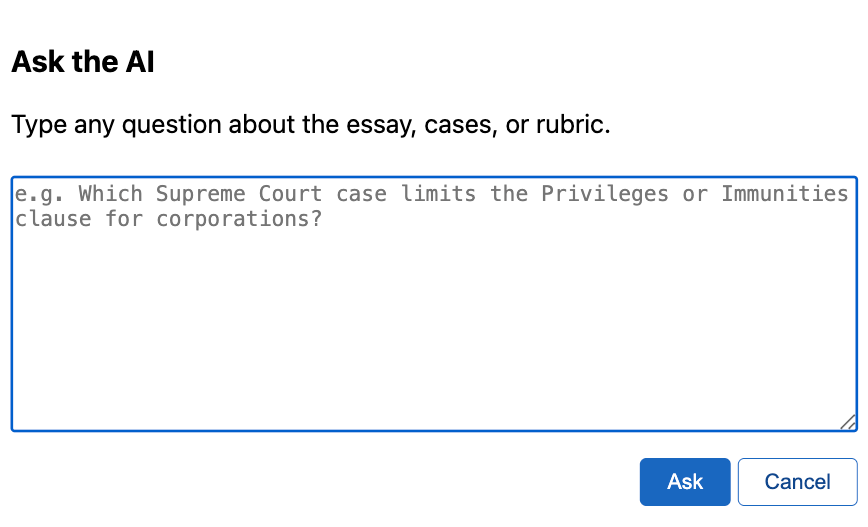

Targeted Q&A. Next, you press the I have a question button. A window opens. It looks like this.

You overwrite the default and say you don't understand why National Pork Producers was so important. Within 30 seconds, the AI responds with something like the following: “National Pork Producers Council v. Ross (2023) matters because it supplies the Court’s latest guidance on three DCC points. First, it collapses the old extraterritoriality test into ordinary Pike balancing, so any law with upstream effects—like Franklin’s certification rule—must be filtered through that lens. Second, it clarifies that moral or policy goals can qualify as a “legitimate local interest,” though discrimination still triggers near-per se invalidity. Third, the opinions show where the current Justices sit on balancing versus categorical bars. Citing Ross proves you grasp the contemporary framework and can distinguish Franklin’s facially discriminatory statute under modern Dormant Commerce jurisprudence."

Rinse and Repeat. You can repeat this loop not only on this prompt but on other prompts you find in books, on the internet, or in other professors' tests. Alternatively, you can give AI a copy of your syllabus and tell it to draft an essay question that addresses some subset (perhaps three issues) of what the class has studied so far. You can then have it draft a model answer and scoring rubric, feed these into the app, and go through the same learning experience.

Acknowledging the Placebo Effect

Will the AI’s feedback mirror your professor’s line-by-line? Probably not. On the other hand, few professors I know of will give you a line-by-line critique of a practice essay response. (Or they will sneakily use AI to do so!) Yet the very act of soliciting and reading machine critique stimulates deliberate practice. Timely, specific commentary, regardless of its ultimate accuracy, primes students to rewrite, clarify, and tighten argumentation. That “nudge” itself should boost performance even when the feedback is imperfect. Think of the grader, therefore, not as an oracle whose numbers dictate your fate but as a sparring partner that keeps you writing. The psychological lift of instant interaction, the safety to experiment, and the discipline of a visible score together create at best an insightful tutor and at worst a productive placebo. Either way, you work harder and smarter because the machine is watching.

Bugs and Features

It would not shock me if this app (which I guess I should call a beta version) had bugs. This one was not dashed off in a morning. It took a lot of work to figure out how to access different models and to get the user interface right. So, if you find problems, email me at aiforlegaled @ gmail dot com. Also, if you have ideas for features that would enhance the application without making it overly complicated, let me know and I will see what I can do.